Computers, Monitors & Technology Solutions

NEW LATITUDE LAPTOPS

AI-Enhanced Productivity

Unlock next-level computing with AI PCs and Intel® Core™ Ultra processors.

SEASONAL TECH EVENT

Solutions for Every Season

Upgrade to our latest technology with these special offers and save up to $750.

WELCOME TO NOW

Revive History With Generative AI

Discover how GenAI is preserving memories and helping to write new ones.

Dell Technologies Showcase

Featured Products and Solutions

NEW XPS LAPTOPS

Iconic Design. Now with AI.

Sleek laptops with Intel® Core™ Ultra processors and built-in AI, ready for every project.

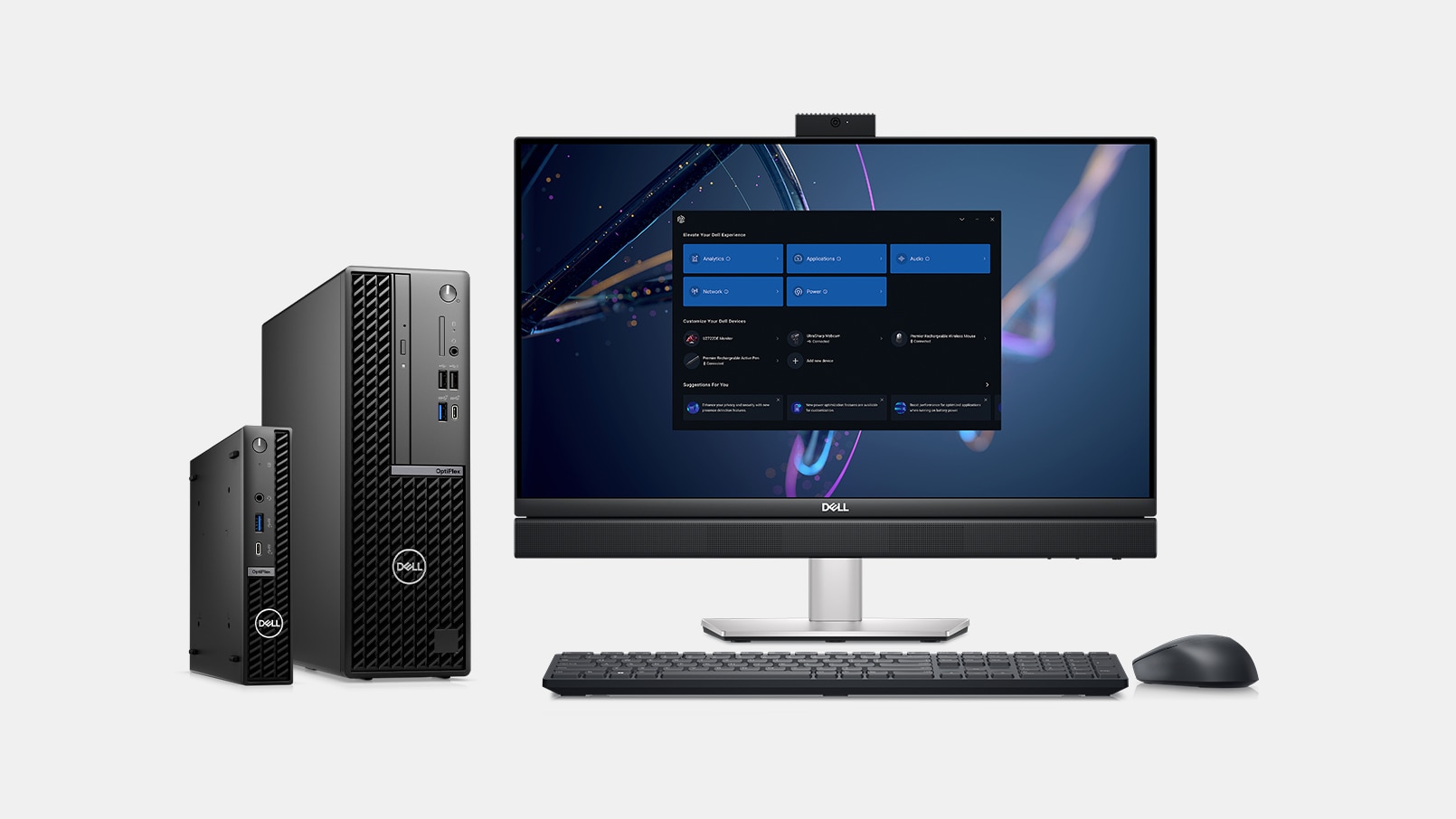

OPTIPLEX DESKTOP FAMILY

Intelligence Meets Simplicity

Engineered for reliable user experiences and simplified management.

ALIENWARE m16 R2

Make a Scene or Go Unseen

Stealth mode-ready, AI-enabled performance featuring up to an Intel® Core™ Ultra 9 processor.

SERVERS, STORAGE, NETWORKING

Flexible, Scalable IT Solutions

Power transformation with server, storage and network solutions that adapt and scale to your business needs.

WE'VE GOT YOU COVERED

We Have More Than Just PCs

Equip your home and workspace with the right accessories.

Dell Support

We're Here to Help

From offering expert advice to solving complex problems, we've got you covered.

My Account

Create a Dell account and enroll in Dell Rewards to unlock an array of special perks.

Easy Ordering

Order Tracking

Dell Rewards

Dell Premier

Take a hands-on approach to IT purchasing for your business with personalized product selection and easy ordering through our customizable online platform.

Simplify Purchasing

Discover Insights

Shop Securely

Latest from Dell Technologies

What's Happening

DELL REWARDS

Shop More. Earn More.