Data Domain: OS Upgrade Guide for High Availability (HA) Systems

Summary: Process overview for Data Domain Operating System (DDOS) upgrades on Data Domain "Highly Available" (DDHA) appliances.

Instructions

HA system planned maintenance

To reduce planned maintenance downtime, the HA architecture supports a rolling upgrade. A rolling upgrade upgrades the standby node first and then performs a planned HA failover to move services from the active node to the standby node. Finally, the previously active node is upgraded and rejoins the HA cluster as the standby node. The whole process is driven by a single command.

An alternative, manual approach is the “local upgrade”: manually upgrade the standby node first, then manually upgrade the active node; finally, the standby node rejoins the HA cluster. A local upgrade can be used either for a regular upgrade or to fix issues.

Any system upgrade operations on the active node that require data conversion do not start until both systems are upgraded to the same level and the HA state is fully restored.

Check the following KB before proceeding with this procedure:

PowerProtect Data Domain: DDHA Upgrade Pre-Check

DDOS 5.7 and later support two upgrade methods for HA systems:

- Rolling upgrade: automatically upgrades both HA nodes with one command. Services move to the other node after the upgrade.

- Local upgrade: manually upgrades the HA nodes one at a time. Services stay on the same node after the upgrade.

Rolling upgrade via GUI:

Prepare the system for upgrade:

-

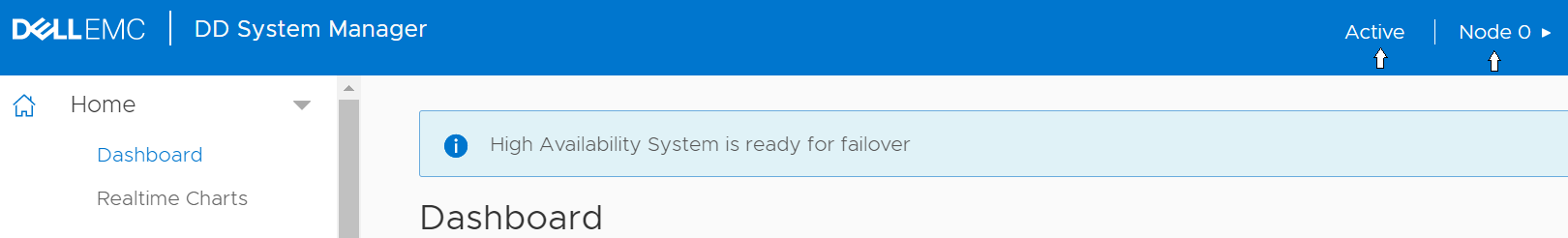

Please make sure HA system status is 'highly available'.

Login GUI -> Home -> Dashboard

- The DDOS RPM file should be placed on the active node, and the upgrade should be started from this node.

Log in to the GUI -> Home -> Dashboard

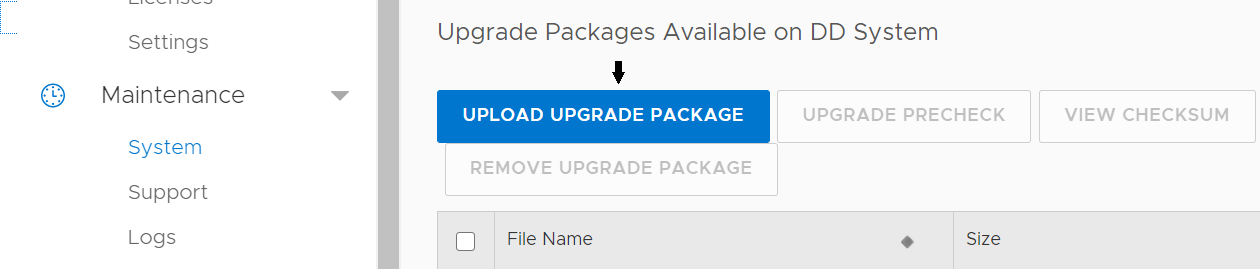

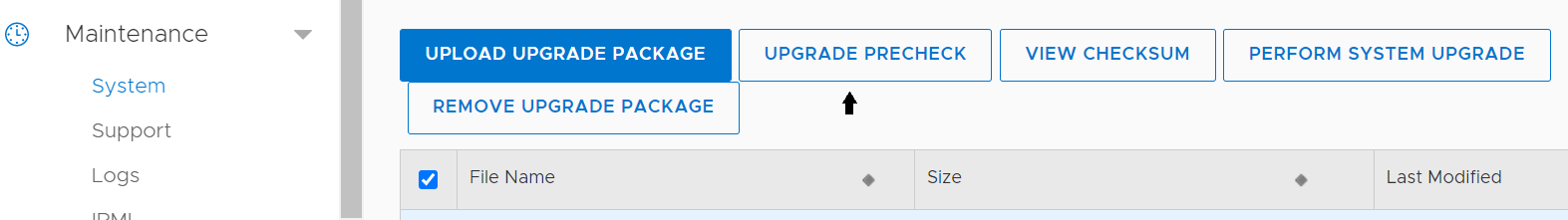

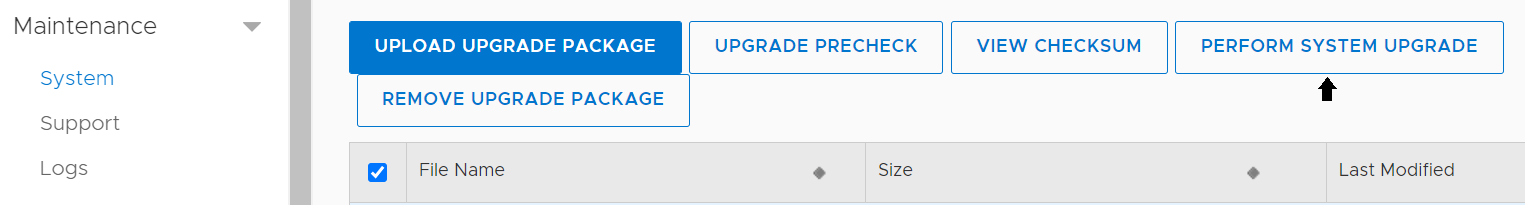

- Upload the RPM file to the active node.

After the upload, the RPM file is listed.

- Run the precheck on the active node. Abort the upgrade if the precheck reports any error.

Also stop filesystem cleaning, data movement, and replication before starting the rolling upgrade so that these jobs do not lengthen the DDFS shutdown during the upgrade. A shorter DDFS shutdown minimizes the impact on clients. These are background workloads and do not affect client backup/restore operations.

As needed, these services can be resumed after the upgrade completes by using the corresponding enable commands. Refer to the administration guide for details.

The administration guide describes some other manual checks and commands that are not strictly necessary for an HA system. A pre-upgrade reboot is currently suggested as a test for single-node systems. It is not needed for HA systems, because the optional “ha failover” step below already includes an automatic reboot during the failover process.

- Optional: before running the rolling upgrade, it is recommended to perform an HA failover manually twice from the active node to test failover functionality. Be aware that this operation reboots the active node.

First, prepare for the failover by stopping cleaning, data movement, and replication (refer to the administration guide for how to do this in the GUI). These services do not affect client backup/restore workloads. Then perform the “ha failover”.

(When the HA System status becomes ‘highly available’ again, run the second ‘ha failover’ and wait for both nodes to come online.)

After the HA failover, the stopped services can be resumed by using the corresponding enable commands. Refer to the administration guide for details.

The failover tests above are optional and do not have to be performed right before the upgrade. They can be performed in advance, for example two weeks earlier, so that a smaller maintenance window can be used for the upgrade itself. The DDFS service downtime for each failover is around 10 minutes (more or less, depending on the DDOS version and other factors). With DDOS 7.4 and later, this downtime is expected to decrease from release to release due to continuous DDOS software enhancements.

- If the precheck finished without any issues, start the rolling upgrade on the active node.

- Wait for the rolling upgrade to finish. Do not trigger any HA failover operation before it completes.

DDFS availability during the rolling upgrade:

- The rolling upgrade first upgrades the standby node and reboots it into the new version. This takes roughly 20 to 30 minutes, depending on various factors. During this period, the DDFS service stays up on the active node without any performance degradation.

- After the new DDOS is applied, the system fails over the DDFS service to the upgraded standby node. This takes roughly 10 minutes (more or less, depending on various factors).

- One significant factor is the disk enclosure (DAE) firmware upgrade, which may add about 20 minutes of downtime depending on how many DAEs are configured. Refer to the KB “Data Domain: HA Rolling upgrade may fail for external enclosure firmware upgraded” to determine whether a DAE firmware upgrade is required. Starting with DDOS 7.5, DAE firmware can be upgraded online, which eliminates this concern.

- Contact Dell Support to discuss factors that could affect upgrade times. Depending on the client OS, the application, and the protocol between the client and the HA system, you may need to resume client workloads manually right after the failover. For example, with DDBoost clients, if the failover takes longer than 10 minutes, the clients time out and the workloads must be resumed manually. However, clients usually provide tunables for timeout values and retry counts.

- Note that the DDFS service is down during the failover. Watch the output of the “filesys status” command on the upgraded node to see whether the DDFS service has resumed. With DDOS 7.4 and later, this downtime is expected to keep decreasing due to enhancements to the DDOS code.

After the failover, the previously active node is upgraded. Once the upgrade is applied, it reboots into the new version and rejoins the HA cluster as the standby node. The DDFS service is not affected during this process because it has already resumed on the other node.

Verification:

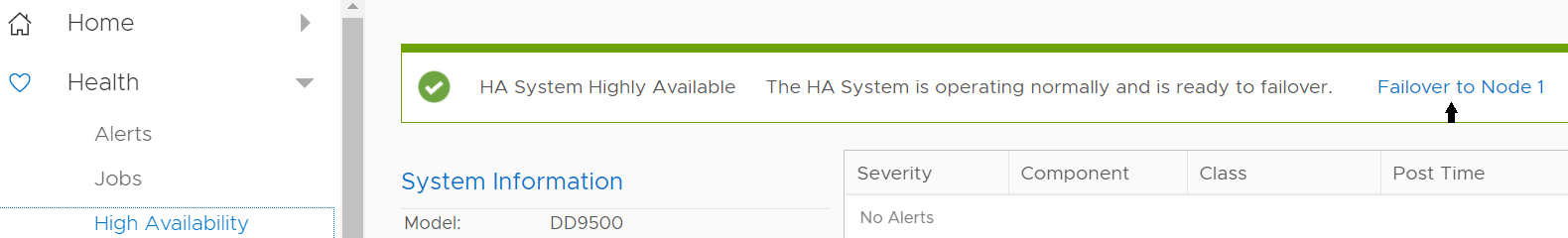

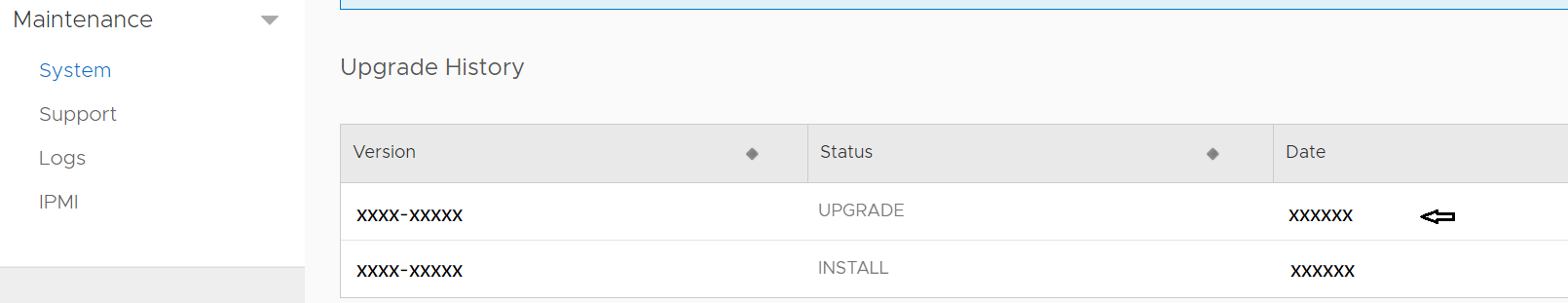

- After the rolling upgrade finishes, log in to the GUI using the IP address of the previously standby node (in this case, node 1).

- Check for any unexpected alerts.

- At this point, the rolling upgrade has finished successfully.

Rolling upgrade via CLI:

Prepare the system for upgrade:

- Make sure the HA system status is ‘highly available’.

#ha status

HA System name: HA-system

HA System status: highly available <-

Node Name Node id Role HA State

----------------------------- ------- ------- --------

Node0 0 active online

Node1 1 standby online

----------------------------- ------- ------- --------
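If you want to script this check, the following minimal Python sketch parses the text printed by `ha status` and confirms that the system is ‘highly available’ with one active and one standby node, both online. It assumes you have already captured the command output as a string (for example, from an SSH session to the active node); the function names are hypothetical, and the parser relies only on the sample layout shown above, which may differ between DDOS releases.

```python
import re


def parse_ha_status(output: str) -> dict:
    """Extract the HA system status and the node table from `ha status` output."""
    status = {"system_status": None, "nodes": {}}
    for raw in output.splitlines():
        line = raw.split("<-")[0].strip()  # drop '<-' annotations used in this article
        match = re.match(r"HA System status:\s*(.+)", line, re.IGNORECASE)
        if match:
            status["system_status"] = match.group(1).strip()
            continue
        parts = line.split()
        # Node rows have four columns: name, numeric id, role, HA state.
        if len(parts) == 4 and parts[1].isdigit():
            name, node_id, role, state = parts
            status["nodes"][name] = {"id": int(node_id), "role": role, "state": state}
    return status


def ready_for_rolling_upgrade(status: dict) -> bool:
    """True when the pair is 'highly available' with one active and one standby node online."""
    nodes = status["nodes"].values()
    roles = sorted(node["role"] for node in nodes)
    all_online = all(node["state"] == "online" for node in nodes)
    return (
        status["system_status"] == "highly available"
        and roles == ["active", "standby"]
        and all_online
    )


sample = """HA System name: HA-system
HA System status: highly available <-
Node Name Node id Role HA State
----------------------------- ------- ------- --------
Node0 0 active online
Node1 1 standby online
----------------------------- ------- ------- --------"""

parsed = parse_ha_status(sample)
print(parsed["system_status"], ready_for_rolling_upgrade(parsed))
```

The same parse also tells you which node is active, which is where the RPM must be uploaded and the upgrade started.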

- The DDOS RPM file should be placed on the active node, and the upgrade should be started from this node.

#ha status

HA System name: HA-system

HA System status: highly available

Node Name Node id Role HA State

----------------------------- ------- ------- --------

Node0 0 active online <- Node0 is active node

Node1 1 standby online

----------------------------- ------- ------- --------

- Upload the RPM file to the active node.

Client-server # scp <rpm file> sysadmin@HA-system.active_node:/ddr/var/releases/

Password: (customer-defined)

(Run from the client server; the target path is “/ddr/var/releases”.)

Depending on the client's OpenSSH version, you might need the scp -O option (legacy SCP protocol) for the transfer to work.
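The upload can also be driven from a script on the client server. This sketch only builds the `scp` argument list, using the `sysadmin` account and `/ddr/var/releases/` target path from the example above, and runs it with Python's `subprocess`; the host name is the article's placeholder, the helper names are hypothetical, and whether `-O` is needed depends on the client's OpenSSH version (9.0 and later default to the SFTP protocol).

```python
import subprocess
from typing import List

RELEASES_DIR = "/ddr/var/releases/"


def scp_upload_command(rpm_path: str, host: str, user: str = "sysadmin",
                       legacy_protocol: bool = True) -> List[str]:
    """Build the scp argv that copies the DDOS RPM into the node's releases directory."""
    cmd = ["scp"]
    if legacy_protocol:
        cmd.append("-O")  # force the legacy SCP protocol instead of SFTP
    cmd += [rpm_path, f"{user}@{host}:{RELEASES_DIR}"]
    return cmd


def upload_rpm(rpm_path: str, host: str) -> None:
    """Run scp in the foreground; it prompts for the sysadmin password."""
    subprocess.run(scp_upload_command(rpm_path, host), check=True)


if __name__ == "__main__":
    # Placeholder values from the article; replace them with your own.
    print(" ".join(scp_upload_command("x.x.x.x-12345.rpm", "HA-system.active_node")))
```

Keeping the argv builder separate from `upload_rpm` makes it easy to print and review the exact command before anything is copied.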

Active-node # system package list

File Size (KiB) Type Class Name Version

------------------ ---------- ------ ---------- ----- -------

x.x.x.x-12345.rpm 2927007.3 System Production DD OS x.x.x.x

------------------ ---------- ------ ---------- ----- -------
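Before running the precheck, you can confirm from a script that the uploaded file is visible to DDOS. A minimal sketch, assuming the column layout of the sample `system package list` output above (file name in the first column, version in the last); the helper names are hypothetical.

```python
from typing import Dict, List


def parse_package_list(output: str) -> List[Dict[str, str]]:
    """Return one entry per .rpm row of `system package list` output."""
    packages = []
    for line in output.splitlines():
        parts = line.split()
        if parts and parts[0].endswith(".rpm"):
            packages.append({
                "file": parts[0],
                "size_kib": parts[1],
                # The Name column can contain spaces ('DD OS'), so take the last column.
                "version": parts[-1],
            })
    return packages


def rpm_is_listed(output: str, rpm_file: str) -> bool:
    """True if the given RPM file name appears in the package list."""
    return any(pkg["file"] == rpm_file for pkg in parse_package_list(output))
```

Reading the version from the last column avoids depending on how many words the Name column contains.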

- Run the precheck on the active node. Abort the upgrade if the precheck reports any error.

Active-node # system upgrade precheck <rpm file>

Upgrade precheck in progress:

Node 0: phase 1/1 (Precheck 100%) , Node 1: phase 1/1 (Precheck 100%)

Upgrade precheck found no issues.

Also stop GC (filesystem cleaning), data movement, and replication before starting the rolling upgrade so that these jobs do not lengthen the DDFS shutdown during the upgrade. A shorter DDFS shutdown minimizes the impact on clients. These are background workloads and do not affect client backup/restore operations. As needed, these services can be resumed after the upgrade completes by using the corresponding enable commands. Refer to the administration guide for details.

Active-node # filesys clean stop

Active-node # cloud clean stop

Active-node # replication disable all

Use the following “watch” commands to check whether the above operations have completed:

Active-node # filesys clean watch

Active-node # cloud clean watch
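If you automate the preparation, the stop commands and the watch commands above can be issued in a fixed order. The sketch below assumes a hypothetical `run_cli` callable that runs one DDOS CLI command on the active node (for example over SSH) and returns its text output. It does not answer any confirmation prompts, and it assumes the “watch” commands return once the operation has finished when run non-interactively; verify both on your system.

```python
from typing import Callable, List

# Commands from the steps above, in the order they are issued.
QUIESCE_COMMANDS = [
    "filesys clean stop",
    "cloud clean stop",
    "replication disable all",
]
# Commands used afterwards to confirm that cleaning has stopped.
WATCH_COMMANDS = [
    "filesys clean watch",
    "cloud clean watch",
]


def quiesce_before_upgrade(run_cli: Callable[[str], str]) -> List[str]:
    """Issue the stop/disable commands, then the watch commands; return what was run."""
    executed = []
    for command in QUIESCE_COMMANDS + WATCH_COMMANDS:
        run_cli(command)  # hypothetical transport to the active node
        executed.append(command)
    return executed
```

Passing the transport in as a callable keeps the ordering logic testable without a live system.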

The administration guide describes some other manual checks and commands that are not strictly necessary for an HA system. A pre-upgrade reboot is currently suggested as a test for single-node systems. It is not needed for HA systems, because the optional “ha failover” step below already includes an automatic reboot during the failover process.

- Optional: before running the rolling upgrade, it is recommended to perform an HA failover manually twice from the active node to test failover functionality. Be aware that this operation reboots the active node.

First, prepare for the failover by stopping GC, data movement, and replication. These services do not affect client backup/restore workloads. Then run “ha failover”.

The commands to do this are as follows:

Active-node # filesys clean stop

Active-node # cloud clean stop

Active-node # replication disable all

Use the following "watch" commands to check whether the above operations have completed:

Active-node # filesys clean watch

Active-node # cloud clean watch

Then run the failover command:

Active-node # ha failover

This operation will initiate a failover from this node. The local node will reboot.

Do you want to proceed? (yes|no) [no]: yes

Failover operation initiated. Run 'ha status' to monitor the status

(When the HA System status becomes ‘highly available’ again, run the second ‘ha failover’ and wait for both nodes to come online.)

After the HA failover, the stopped services can be resumed by using the corresponding enable commands. Refer to the administration guide for details.

The failover tests above are optional and do not have to be performed right before the upgrade. They can be performed in advance, for example two weeks earlier, so that a smaller maintenance window can be used for the upgrade itself. The DDFS service downtime for each failover is around 10 minutes (more or less, depending on the DDOS version and other factors). With DDOS 7.4 and later, this downtime is expected to decrease from release to release due to continuous DDOS software enhancements.

- If the precheck finished without any issues, start the rolling upgrade on the active node.

Active-node # system upgrade start <rpm file>

The 'system upgrade' command upgrades the Data Domain OS. File access

is interrupted during the upgrade. The system reboots automatically

after the upgrade.

Are you sure? (yes|no) [no]: yes

ok, proceeding.

Upgrade in progress:

Node Severity Issue Solution

---- -------- ------------------------------ --------

0 WARNING 1 component precheck script(s) failed to complete

0 INFO Upgrade time est: 60 mins

1 WARNING 1 component precheck script(s) failed to complete

1 INFO Upgrade time est: 80 mins

---- -------- ------------------------------ --------

Node 0: phase 2/4 (Install 0%) , Node 1: phase 1/4 (Precheck 100%)

Upgrade phase status legend:

DU : Data Upgrade

FO : Failover

..

PC : Peer Confirmation

VA : Volume Assembly

Node 0: phase 3/4 (Reboot 0%) , Node 1: phase 4/4 (Finalize 5%) FO

Upgrade has started. System will reboot.
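The issue table printed at the start of the upgrade mixes real findings with per-node time estimates. A minimal sketch for separating the two, assuming one table row per line as in the output above (wrapped continuation lines are ignored); the function name is hypothetical, and ERROR is an assumed third severity keyword, since only WARNING and INFO appear in this article.

```python
import re
from typing import Dict, List, Tuple

ROW_RE = re.compile(r"^\s*(\d+)\s+(ERROR|WARNING|INFO)\s+(.+?)\s*$")
EST_RE = re.compile(r"Upgrade time est:\s*(\d+)\s*mins")


def parse_upgrade_issues(output: str) -> Tuple[List[Tuple[int, str, str]], Dict[int, int]]:
    """Split the 'Node Severity Issue Solution' table into issues and per-node estimates."""
    issues: List[Tuple[int, str, str]] = []
    estimates: Dict[int, int] = {}
    for line in output.splitlines():
        match = ROW_RE.match(line)
        if not match:
            continue  # headers, separators, phase lines, wrapped text
        node, severity, text = int(match.group(1)), match.group(2), match.group(3)
        est = EST_RE.search(text)
        if est:
            estimates[node] = int(est.group(1))  # minutes
        else:
            issues.append((node, severity, text))
    return issues, estimates
```

The largest per-node estimate is a reasonable upper bound to plan the maintenance window around; any ERROR row should be treated like a failed precheck.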

DDFS availability during the above command:

- The rolling upgrade first upgrades the standby node and reboots it into the new version. This takes roughly 20 to 30 minutes, depending on various factors. During this period, the DDFS service stays up on the active node without any performance degradation.

- After the new DDOS is applied, the system fails over the DDFS service to the upgraded standby node. This takes roughly 10 minutes (more or less, depending on various factors).

- One significant factor is the disk enclosure (DAE) firmware upgrade, which may add about 20 minutes of downtime depending on how many DAEs are configured. Refer to the KB “Data Domain: HA Rolling upgrade may fail for external enclosure firmware upgraded” to determine whether a DAE firmware upgrade is required. Starting with DDOS 7.5, DAE firmware can be upgraded online, which eliminates this concern.

- Contact Dell Support to discuss factors that could affect upgrade times. Depending on the client OS, the application, and the protocol between the client and the HA system, you may need to resume client workloads manually right after the failover. For example, with DDBoost clients, if the failover takes longer than 10 minutes, the clients time out and the workloads must be resumed manually. However, clients usually provide tunables for timeout values and retry counts.

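- Note that the DDFS service is down during the failover. Watch the output of the “filesys status” command on the upgraded node to see whether the DDFS service has resumed. With DDOS 7.4 and later, this downtime is expected to keep decreasing due to enhancements to the DDOS code.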

After the failover, the previously active node is upgraded. Once the upgrade is applied, it reboots into the new version and rejoins the HA cluster as the standby node. The DDFS service is not affected during this process because it has already resumed on the other node.
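The timing guidance above can be turned into a rough planning check. The sketch uses only the approximate figures quoted in this article: about 10 minutes of DDFS downtime for the failover, plus roughly 20 minutes when a DAE firmware upgrade is required and cannot be done online (the real figure depends on how many DAEs are configured). The standby-node upgrade is not counted because DDFS stays up during it. The function names and the client-timeout parameter are hypothetical; treat the result as an estimate, not a guarantee.

```python
# Approximate figures quoted in this article; real values vary by release and configuration.
FAILOVER_DOWNTIME_MIN = 10  # DDFS downtime for one HA failover
DAE_FW_EXTRA_MIN = 20       # extra downtime when DAE firmware must be upgraded offline


def estimated_ddfs_downtime(dae_fw_upgrade_required: bool,
                            online_dae_fw_upgrade: bool) -> int:
    """Rough DDFS downtime, in minutes, for the failover step of a rolling upgrade."""
    downtime = FAILOVER_DOWNTIME_MIN
    if dae_fw_upgrade_required and not online_dae_fw_upgrade:
        downtime += DAE_FW_EXTRA_MIN
    return downtime


def clients_may_time_out(downtime_min: int, client_timeout_min: int) -> bool:
    """Clients whose timeout is shorter than the outage may need manual resumption."""
    return downtime_min > client_timeout_min


# Example: DAE firmware upgrade needed, no online DAE FW upgrade, 10-minute client timeout.
outage = estimated_ddfs_downtime(True, False)
print(outage, clients_may_time_out(outage, 10))  # 30 True
```

If the check reports a likely timeout, either raise the client-side timeout/retry tunables beforehand or plan to resume those workloads manually after the failover.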

- After the standby node (node1) has rebooted and become accessible, you can log in to it to monitor the upgrade status and progress.

Node1 # system upgrade status

Current Upgrade Status: DD OS upgrade In Progress

Node 0: phase 3/4 (Reboot 0%)

Node 1: phase 4/4 (Finalize 100%) waiting for peer confirmation
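When monitoring from a script, the per-node phase lines can be turned into structured progress. A minimal sketch, assuming the “Node N: phase X/Y (Name P%)” format shown in the outputs above; trailing text such as “waiting for peer confirmation” is ignored, and the names `Phase` and `parse_phases` are hypothetical.

```python
import re
from typing import Dict, NamedTuple

PHASE_RE = re.compile(r"Node\s+(\d+):\s+phase\s+(\d+)/(\d+)\s+\(([^)]*?)\s+(\d+)%\)")


class Phase(NamedTuple):
    current: int
    total: int
    name: str
    percent: int


def parse_phases(text: str) -> Dict[int, Phase]:
    """Map node id -> the last phase reported for it in the given text."""
    phases: Dict[int, Phase] = {}
    for m in PHASE_RE.finditer(text):
        # Later lines overwrite earlier ones, so streamed output yields the latest state.
        phases[int(m.group(1))] = Phase(int(m.group(2)), int(m.group(3)),
                                        m.group(4), int(m.group(5)))
    return phases
```

Because later matches overwrite earlier ones, the same function works on a single status snapshot or on accumulated `system upgrade watch` output.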

- Wait for the rolling upgrade to finish. Do not trigger any HA failover operation before it completes.

Node1 # system upgrade status

Current Upgrade Status: DD OS upgrade Succeeded

End time: 20xx.xx.xx:xx:xx
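Waiting for the final “Succeeded” status can be scripted as a simple polling loop. The sketch assumes a hypothetical `run_cli` callable that runs a DDOS CLI command on the node you are logged in to and returns its text; connection errors (for example while a node is rebooting) are treated as “not finished yet”. Only “In Progress” and “Succeeded” appear in this article, so any other value is simply returned for you to inspect. The poll interval and timeout are arbitrary example values.

```python
import re
import time
from typing import Callable, Optional

STATUS_RE = re.compile(r"Current Upgrade Status:\s*DD OS upgrade\s+(.+)")


def upgrade_state(output: str) -> Optional[str]:
    """Return e.g. 'In Progress' or 'Succeeded', or None if the status line is absent."""
    match = STATUS_RE.search(output)
    return match.group(1).strip() if match else None


def wait_for_upgrade(run_cli: Callable[[str], str],
                     poll_seconds: float = 60.0,
                     timeout_seconds: float = 3 * 3600,
                     sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll `system upgrade status` until it stops reporting 'In Progress'."""
    deadline = time.monotonic() + timeout_seconds
    while True:
        try:
            state = upgrade_state(run_cli("system upgrade status"))
        except OSError:
            state = None  # node rebooting or unreachable; try again later
        if state is not None and state.lower() != "in progress":
            return state
        if time.monotonic() >= deadline:
            raise TimeoutError("upgrade still in progress after timeout")
        sleep(poll_seconds)
```

The loop only reads status; in line with the step above, it never triggers a failover.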

- Check the HA status: both nodes should be online, and the HA system status should be ‘highly available’.

Node1 # ha status detailed

HA System name: HA-system

HA System Status: highly available

Interconnect Status: ok

Primary Heartbeat Status: ok

External LAN Heartbeat Status: ok

Hardware compatibility check: ok

Software Version Check: ok

Node Node1:

Role: active

HA State: online

Node Health: ok

Node Node0:

Role: standby

HA State: online

Node Health: ok

Mirroring Status:

Component Name Status

-------------- ------

nvram ok

registry ok

sms ok

ddboost ok

cifs ok

-------------- ------

Verification:

- Check that both nodes have the same DDOS version.

Node1 # system show version

Data Domain OS x.x.x.x-12345

Node0 # system show version

Data Domain OS x.x.x.x-12345
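For scripted verification, the version check can compare the strings reported by `system show version` on each node. A minimal sketch, assuming the “Data Domain OS <version>” line format shown above; the function names are hypothetical.

```python
import re
from typing import Dict, Optional

VERSION_RE = re.compile(r"Data Domain OS\s+(\S+)")


def ddos_version(output: str) -> Optional[str]:
    """Extract the version token from `system show version` output."""
    match = VERSION_RE.search(output)
    return match.group(1) if match else None


def versions_match(outputs_by_node: Dict[str, str]) -> bool:
    """True when every node reports the same, successfully parsed DDOS version."""
    versions = {ddos_version(text) for text in outputs_by_node.values()}
    return len(versions) == 1 and None not in versions
```

An unparsable output counts as a mismatch, so a node that returned an error message is never reported as up to date.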

- Check for any unexpected alerts.

Node1 # alert show current

Node0 # alert show current

- At this point, the rolling upgrade has finished successfully.

Note: If you encounter any issue with the upgrade, contact Data Domain Support for further instructions and support.

LOCAL UPGRADE for DDHA Pair:

A local upgrade broadly functions as follows:

Prepare the system for upgrade:

- Check the HA system status. A local upgrade works even if the status is degraded.

#ha status

HA System name: HA-system

HA System status: highly available <-

Node Name Node id Role HA State

----------------------------- ------- ------- --------

Node0 0 active online

Node1 1 standby online

----------------------------- ------- ------- --------

- The DDOS RPM file should be placed on both nodes, and the upgrade should be started from the standby node.

#ha status

HA System name: HA-system

HA System status: highly available

Node Name Node id Role HA State

----------------------------- ------- ------- --------

Node0 0 active online

Node1 1 standby online <- Node1 is standby node

----------------------------- ------- ------- --------

- Upload the RPM file to both nodes.

Client-server # scp <rpm file> sysadmin@HA-system.active_node:/ddr/var/releases/

Client-server # scp <rpm file> sysadmin@HA-system.standby_node:/ddr/var/releases/

Password: (customer-defined)

(Run from the client server; the target path is “/ddr/var/releases”.)

Depending on the client's OpenSSH version, you might need the scp -O option (legacy SCP protocol) for the transfer to work.

Active-node # system package list

File Size (KiB) Type Class Name Version

------------------ ---------- ------ ---------- ----- -------

x.x.x.x-12345.rpm 2927007.3 System Production DD OS x.x.x.x

------------------ ---------- ------ ---------- ----- ------

Standby-node # system package list

File Size (KiB) Type Class Name Version

------------------ ---------- ------ ---------- ----- -------

x.x.x.x-12345.rpm 2927007.3 System Production DD OS x.x.x.x

------------------ ---------- ------ ---------- ----- ------

- If the HA status is ‘highly available’, run the precheck on the active node. Abort the upgrade if the precheck reports any error.

Active-node # system upgrade precheck <rpm file>

Upgrade precheck in progress:

Node 0: phase 1/1 (Precheck 100%) , Node 1: phase 1/1 (Precheck 100%)

Upgrade precheck found no issues.

If the HA status is "degraded", run the precheck on both nodes:

Active-node # system upgrade precheck <rpm file> local

Upgrade precheck in progress:

Node 0: phase 1/1 (Precheck 100%)

Upgrade precheck found no issues.

Standby-node # system upgrade precheck <rpm file> local

Upgrade precheck in progress:

Node 1: phase 1/1 (Precheck 100%)

Upgrade precheck found no issues.

- Take the standby node offline.

Standby-node # ha offline

This operation will cause the ha system to no longer be highly available.

Do you want to proceed? (yes|no) [no]: yes

Standby node is now offline.

(NOTE: If the offline operation fails or the HA status is degraded, continue with the local upgrade, because later steps may handle these failures.)

- Make sure the standby node status is offline.

Standby-node # ha status

HA System name: HA-system

HA System status: degraded

Node Name Node id Role HA State

----------------------------- ------- ------- --------

Node1 1 standby offline

Node0 0 active degraded

----------------------------- ------- ------- --------

- Perform the local upgrade on the standby node. This operation reboots the standby node.

Standby-node # system upgrade start <rpm file> local

The 'system upgrade' command upgrades the Data Domain OS. File access

is interrupted during the upgrade. The system reboots automatically

after the upgrade.

Are you sure? (yes|no) [no]: yes

ok, proceeding.

The 'local' flag is highly disruptive to HA systems and should be used only as a repair operation.

Are you sure? (yes|no) [no]: yes

ok, proceeding.

Upgrade in progress:

Node 1: phase 3/4 (Reboot 0%)

Upgrade has started. System will reboot.

- The standby node will reboot into the new version of DDOS but stay offline.

- Check the system upgrade status. The OS upgrade might take more than 30 minutes to finish.

Standby-node # system upgrade status

Current Upgrade Status: DD OS upgrade Succeeded

End time: 20xx.xx.xx:xx:xx

- Check the HA system status: the standby node (in this case, node1) is offline, and the HA status is ‘degraded’.

Standby-node # ha status

HA System name: HA-system

HA System status: degraded

Node Name Node id Role HA State

----------------------------- ------- ------- --------

Node1 1 standby offline

Node0 0 active degraded

----------------------------- ------- ------- --------

- Perform the local upgrade on the active node. This operation reboots the active node.

Active-node # system upgrade start <rpm file> local

The 'system upgrade' command upgrades the Data Domain OS. File access

is interrupted during the upgrade. The system reboots automatically

after the upgrade.

Are you sure? (yes|no) [no]: yes

ok, proceeding.

The 'local' flag is highly disruptive to HA systems and should be used only as a repair operation.

Are you sure? (yes|no) [no]: yes

ok, proceeding.

Upgrade in progress:

Node Severity Issue Solution

---- -------- ------------------------------ --------

0 WARNING 1 component precheck

script(s) failed to complete

0 INFO Upgrade time est: 60 mins

---- -------- ------------------------------ --------

Node 0: phase 3/4 (Reboot 0%)

Upgrade has started. System will reboot.

- Check the system upgrade status. The OS upgrade might take more than 30 minutes to finish.

Active-node # system upgrade status

Current Upgrade Status: DD OS upgrade Succeeded

End time: 20xx.xx.xx:xx:xx

- After the active node upgrade finishes, the HA system status is still ‘degraded’. Run the following command on the standby node to bring it online; this reboots the standby node.

Standby-node # ha online

The operation will reboot this node.

Do you want to proceed? (yes|no) [no]: yes

Broadcast message from root (Wed Oct 14 22:38:53 2020):

The system is going down for reboot NOW!

**** Error communicating with management service.

(NOTE: If ‘ha offline’ was not run in the previous steps, skip this step.)

- The standby node reboots and rejoins the cluster. After that, the HA status becomes ‘highly available’ again.

Active-node # ha status detailed

HA System name: HA-system

HA System Status: highly available

Interconnect Status: ok

Primary Heartbeat Status: ok

External LAN Heartbeat Status: ok

Hardware compatibility check: ok

Software Version Check: ok

Node node0:

Role: active

HA State: online

Node Health: ok

Node node1:

Role: standby

HA State: online

Node Health: ok

Mirroring Status:

Component Name Status

-------------- ------

nvram ok

registry ok

sms ok

ddboost ok

cifs ok

-------------- ------
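The ordering rules of the local upgrade above (precheck first, standby node before active node, standby brought back online last) can be captured as a small runbook generator, which is handy for preparing a change ticket. A minimal sketch using only the commands shown in this section; the function name is hypothetical, and it encodes order only: you still need to wait for `system upgrade status` to report success and check `ha status` between steps.

```python
from typing import List, Tuple


def local_upgrade_runbook(rpm_file: str, ha_system_status: str) -> List[Tuple[str, str]]:
    """Return (node, command) pairs for a local upgrade, in execution order."""
    steps: List[Tuple[str, str]] = []
    if ha_system_status == "highly available":
        steps.append(("active", f"system upgrade precheck {rpm_file}"))
    else:  # degraded: precheck each node on its own
        steps.append(("active", f"system upgrade precheck {rpm_file} local"))
        steps.append(("standby", f"system upgrade precheck {rpm_file} local"))
    steps += [
        ("standby", "ha offline"),
        ("standby", f"system upgrade start {rpm_file} local"),
        ("active", f"system upgrade start {rpm_file} local"),
        ("standby", "ha online"),
    ]
    return steps


for node, command in local_upgrade_runbook("x.x.x.x-12345.rpm", "highly available"):
    print(f"{node.capitalize()}-node # {command}")
```

Generating the list from the HA status keeps the precheck form (cluster-wide versus per-node `local`) consistent with the guidance in this section.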

Verification:

- Check that both nodes have the same DDOS version.

Node1 # system show version

Data Domain OS x.x.x.x-12345

Node0 # system show version

Data Domain OS x.x.x.x-12345

- Check for any unexpected alerts.

Node1 # alert show current

Node0 # alert show current

- At this point, the local upgrade has finished successfully.

Additional Information

Rolling upgrade:

- A single failover is performed during the upgrade, so the node roles swap.

- Upgrade information is still logged in infra.log, but additional information may be found in ha.log.

- Upgrade progress can be monitored with “system upgrade watch”.

Local node upgrade:

- A local node upgrade does not perform an HA failover.

- As a result, there is an extended period of downtime while the active node upgrades, reboots, and performs post-reboot upgrade activities, which will likely cause backups/restores to time out and fail. Allocate a maintenance window for a local upgrade.

- A local upgrade can proceed even if the HA system status is ‘degraded’.

- A rolling upgrade may fail unexpectedly for various reasons. In that situation, a local upgrade can be considered as a fix.
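The guidance in this section boils down to a simple decision: use a rolling upgrade when the pair is ‘highly available’, and fall back to a local upgrade when the system is degraded or a rolling upgrade has failed. A minimal sketch of that rule; the function name is hypothetical, and it does not replace the precheck or a discussion with Dell Support.

```python
def choose_upgrade_method(ha_system_status: str, rolling_upgrade_failed: bool = False) -> str:
    """Pick 'rolling' or 'local' following the guidance in this article."""
    if ha_system_status == "highly available" and not rolling_upgrade_failed:
        return "rolling"  # one command, single failover, shorter DDFS outage
    return "local"  # works when degraded; requires a maintenance window


print(choose_upgrade_method("highly available"))  # rolling
print(choose_upgrade_method("degraded"))          # local
```

Choosing “local” implies the extended downtime described above, so schedule a maintenance window before proceeding.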