By Kathryne Nave, contributor, Dell Technologies

Few social routines are as fraught as the handshake. Nailing that firm-but-friendly balance of force is tricky enough at the best of times. For the prosthetics user, it can feel like a stab in the dark.

“We don’t often appreciate it, but the human hand is a remarkable sensory organ,” says Robert Gaunt, associate professor of physical medicine and rehabilitation at the University of Pittsburgh. “Without this, it becomes incredibly difficult to pick up and manipulate objects. Or, you think about typing on a keyboard, most of us depend upon the sense of touch rather than actually looking at the keys.”

Using an electrode array called the Utah Electrode Array (UEA), which can be implanted directly and permanently into a person’s brain, Gaunt is working to restore that sense of touch to users of prosthetic limbs.

“A Utah Array looks like a tiny bed of nails, around four millimeters across, where each nail is only about a millimeter long and approximately the width of a human hair,” Gaunt explains. “This is punched into the brain with a small air-powered hammer, allowing us to access neurons about a millimeter deep—which is where some of the most important neurons we want to access are.”

Mind-controlled prosthetics

The UEA is not a new technology. Developed in 1992 by University of Utah bioengineering professor Richard A. Normann, it was the first microelectrode array to receive FDA approval for implantation in humans, and Gaunt estimates that around 30 people worldwide have since had one implanted on a long-term basis.

In most of these cases, the array has been used to detect neural activity and translate it into motor-control signals that let the user move a robotic arm with their thoughts alone.

“With these implanted electrodes, we can listen to the electrical pulses of individual neurons,” Gaunt says. “It’s like a series of rapid bursts or popping sounds, and if you ask a person to imagine moving their hand to the right, some of those neurons will start firing much faster than others. Eventually, you can build up a model to decode those different patterns of activity and turn them into the motion of a robot or a computer cursor on the screen.”
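To make the idea of “decoding patterns of activity” concrete, here is a minimal sketch of one classic textbook approach, the population vector. It assumes each neuron has a “preferred direction” and fires faster the closer the imagined movement is to that direction. This is an illustrative assumption, not the Pittsburgh team’s actual decoder; the cosine tuning model, the function names and the baseline and depth values are all hypothetical.

```python
import math

# Hypothetical tuning parameters (spikes per second); not taken from the study.
BASELINE = 10.0
DEPTH = 8.0

def simulate_rates(preferred, intended):
    """Cosine-tuned firing rates: neurons whose preferred direction is close
    to the intended direction fire above baseline, opposite ones below it."""
    return [BASELINE + DEPTH * math.cos(intended - p) for p in preferred]

def decode_direction(rates, preferred):
    """Population vector: add up each neuron's preferred direction (as a unit
    vector), weighted by how far its rate sits above or below baseline, and
    return the angle of the summed vector in radians."""
    x = sum((r - BASELINE) * math.cos(p) for r, p in zip(rates, preferred))
    y = sum((r - BASELINE) * math.sin(p) for r, p in zip(rates, preferred))
    return math.atan2(y, x)

# Eight hypothetical neurons with evenly spaced preferred directions.
preferred = [2 * math.pi * i / 8 for i in range(8)]

# Imagine moving the hand up and to the right (45 degrees).
rates = simulate_rates(preferred, math.pi / 4)
decoded = decode_direction(rates, preferred)
print(f"decoded direction: {math.degrees(decoded):.1f} degrees")  # prints: decoded direction: 45.0 degrees
```

Real decoders are typically fitted to activity recorded while the participant imagines known movements, rather than assuming perfect cosine tuning; the sketch only shows how a pattern of faster and slower firing can be turned into a direction for a cursor or robot arm.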

Unlike commercially available myoelectric prosthetics, which depend on registering the electrical activity of a person’s residual muscles, the UEA reads directly from the brain. That allows finer-grained motor control, even for people with complete upper spinal cord injuries.

In 2012, Gaunt’s colleague Jennifer Collinger, associate professor and research operations director at the University of Pittsburgh, showed that a person with a UEA implanted in their motor cortex could control a prosthetic arm after just a few weeks of training, achieving a 91.6% success rate when reaching for targets.

You’ve got to feel it to perceive it

Despite these impressive results in decoding movement intentions, Gaunt realized that the advantage of finer-grained control is limited by the lack of sensory feedback, which people ordinarily rely on to guide how they move. That feedback is so crucial, he points out, that in some rare cases a person can be left paralyzed by damage to their sense of touch alone, even when the parts of their brain responsible for controlling movement are fully functional.

So, in 2015, Gaunt and Collinger decided to implant UEAs into the part of the somatosensory cortex that processes manual touch and try sending electrical signals in the opposite direction—stimulating the neurons rather than recording from them.

“We essentially pick an electrode at random, send a burst of electricity to activate it, then ask people if they feel something, and where they feel it,” Gaunt explains. “We know that the sensory regions of the brain are actually laid out in these fairly clear maps, so there’ll be a spot corresponding to the index fingertip, and that’ll be right next to another spot for your middle fingertip, and so on.”
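The mapping procedure Gaunt describes can be sketched as a simple loop: stimulate each electrode in random order, record where (if anywhere) the participant reports a sensation, and group electrodes by reported location. The `report_location` callback stands in for the stimulate-and-ask step and is entirely hypothetical, as are the electrode numbers and reported locations in the usage example.

```python
import random
from collections import defaultdict

def build_touch_map(electrodes, report_location, seed=None):
    """Stimulate each electrode once, in random order, and group electrodes
    by the body location the participant reports feeling.

    report_location(electrode) is a hypothetical stand-in for "send a burst
    of electricity, then ask the participant where they feel it"; it returns
    a location string, or None if nothing was felt.
    """
    rng = random.Random(seed)
    order = list(electrodes)
    rng.shuffle(order)  # test electrodes in a random order, as described

    touch_map = defaultdict(list)
    for electrode in order:
        location = report_location(electrode)
        if location is not None:
            touch_map[location].append(electrode)

    # Sort each group so the map does not depend on the testing order.
    return {loc: sorted(ids) for loc, ids in touch_map.items()}

# Hypothetical responses for six electrodes; electrode 3 evokes no sensation.
responses = {0: "index fingertip", 1: "index fingertip", 2: "middle fingertip",
             3: None, 4: "middle fingertip", 5: "thumb"}

touch_map = build_touch_map(responses, responses.get, seed=1)
print(touch_map["middle fingertip"])  # prints [2, 4]
```

Randomizing the order mirrors the “pick an electrode at random” step; sorting the groups afterwards means the finished map is the same no matter which order the electrodes happened to be tested in.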

Gaunt and Collinger have worked with three participants so far, one of whom, Nathan Copeland, was paralyzed in all four limbs by a car accident in 2004, when he was eighteen years old.

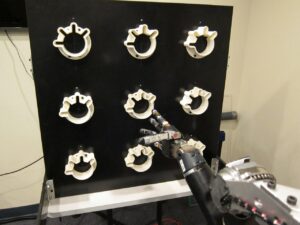

When the two arrays in Copeland’s somatosensory cortex were hooked up to pressure sensors on a robot arm, he was able to identify which of the arm’s fingers was being touched with 84% accuracy. In May 2021, Gaunt’s team showed that this sensory feedback enabled Copeland to pick up an object and move it to a target in about half the time it took using sight alone.

“When I look at an object, I can see that the hand is touching it, but I can’t tell if that’s a firm grasp or just barely touching,” Copeland says. “So sometimes when I’d try to lift the object, it would fall straight out of the hand. Having that extra tactile feedback lets me know if I’ve got a solid grasp on the object, and it lets me move on to picking it up and moving it around immediately, without having to spend the extra time testing whether it’s going to drop out of the hand or not.”

Moving beyond the laboratory

While the sense of touch provided by this brain-machine interface may offer significant practical improvements to a person’s ability to control a limb, it still doesn’t feel quite like normal touch.

“The sensations are pretty straightforward and intuitive, but it usually feels like pressure, tingles or tapping, more at the base of my fingers,” Copeland says. “I have to mark it on a scale from natural to completely unnatural, and almost every time, I’d say it’s right in the middle of that scale—but that doesn’t stop the information from being useful.”

Gaunt’s team is currently working on mimicking the brain’s own tactile code more closely, creating patterns of electrical activity that feel more like ordinary touch. Copeland recalls one session in which the stimulation settings made him stop and look at his own hand, because the sensation felt exactly like someone poking his index finger.

Still, the motivation that drove Copeland to both sign up for elective brain surgery and commit to spending three days a week at the lab for the foreseeable future is not just to make this technology better but to make it available to the people who need it.

“The number of people who meet the criteria for these studies and who live in the right area is so small, so I kind of felt that, since I could help, how could I not,” Copeland says. “When you have these kinds of catastrophic injuries, there’s a period of despair where you feel like your life has changed, and you’re not going to be able to contribute to society. So I just wanted to help push the science forward so that they’ll be able to tell the next generation of people in a similar situation, ‘Look, there is this technology that’s available now, it’s covered by insurance, and it can give you some of these opportunities back.’”

For Copeland, those opportunities have included playing video games with his thoughts, fist-bumping President Obama, traveling to France and Japan to speak at conferences, taking up artwork, and auctioning one of his drawings for $120,000 to raise money for the AURORA Institute, a mental health nonprofit.

“I remember when I joined the study, there was a 23-page consent form which says, in bold font, ‘You will not receive any benefits from participating in this study,’” he recalls. “What that means is that I don’t get to keep the robotic arm or anything. But I’ve made friends with people in the lab, and it’s given me a reason to get up and out of the house each day. I’ve started selling my art, and I’m looking at getting more work in public speaking, whereas before, I wouldn’t have had the confidence to be able to speak to a room of hundreds of people. So the way I see it, I’ve had a tremendous benefit from taking part in this work.”

Lead image of Nathan Copeland courtesy of UPMC/Pitt Health Sciences.