Unsolved

This post is more than 5 years old

1 Rookie

•

1 Message

0

1611

January 2nd, 2020 04:00

Upgrading PSU in Precision T7810 Workstation (to a 1300W PSU?)

I recently purchased a barebones T7810 workstation. It came with the smaller 675W PSU, and I would like to upgrade it. I have purchased two E5-2643 v3 Xeon processors (6 cores, 12 threads, 3.4 GHz, 135W each), and I have one GeForce GTX 690 (which pulls a hefty 300W) and would like to add a second. Eventually, I would like to upgrade to two Nvidia Mars 295x2 cards. I am also building a RAID 5 array with four 2TB drives (or more, if I can make them fit). Long story short, I need a lot of juice. I have a 1300W PSU that I am fairly sure came from a Precision T7910 workstation, Dell part number D1300EF-01. It appears to fit just fine in the case, but does anyone know whether it is actually compatible with the T7810? I know that Dell makes an "approved" 825W PSU, but I already own the D1300EF-01, and even second-hand "refurbished" 825W PSUs on eBay are pretty expensive. If anyone could help, I would greatly appreciate it. Thanks in advance!
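For anyone sizing a similar build, here is a rough back-of-the-envelope sketch of the power budget described above. The CPU and GPU figures are the ones quoted in the post; the per-drive and base-system wattages and the 80% sustained-load margin are assumptions for illustration only, not Dell specifications.

```python
# Rough power-budget sketch. Values marked "assumed" are NOT from the thread.
CPU_TDP_W = 135    # E5-2643 v3 TDP, as quoted in the post
GPU_DRAW_W = 300   # GTX 690 draw, as quoted in the post
DRIVE_W = 10       # assumed: per 3.5" hard drive
BASE_W = 75        # assumed: motherboard, RAM, fans


def estimated_load(cpus, gpus, drives):
    """Sum the nominal draw of the listed components, in watts."""
    return cpus * CPU_TDP_W + gpus * GPU_DRAW_W + drives * DRIVE_W + BASE_W


def headroom(psu_watts, load_watts, margin=0.8):
    """Watts left if sustained load is capped at `margin` of the PSU rating (assumed rule of thumb)."""
    return psu_watts * margin - load_watts


if __name__ == "__main__":
    load = estimated_load(cpus=2, gpus=2, drives=4)
    for psu in (675, 825, 1300):
        print(f"{psu}W PSU: load {load}W, headroom {headroom(psu, load):.0f}W")
```

Under these assumptions, only the 1300W unit leaves positive headroom with two 300W cards installed. Whether the unit is electrically compatible with the T7810 is a separate question that this arithmetic does not answer.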

Hekyl

And on a similar topic: does anyone know where to find a list of "compatible" or replacement parts for a given Dell system? Their tech support is completely useless if you don't have an active warranty, and accurate, helpful info isn't exactly easy to come by. Thanks again!

nonolondon

1 Rookie

•

17 Posts

0

June 24th, 2025 09:12

Hey there, did you ever get any answers? I am facing a similar issue: I would like to upgrade from the 825W PSU to a 1300W one, but I wonder what is compatible and what is not. Thanks

Cyberato

1 Rookie

•

19 Posts

0

June 26th, 2025 10:57

@nonolondon

Hi there. Yes, I finally found the right PSU for my T7810. It fits perfectly and is working great. The system now has 256GB of RAM, two Gigabyte RTX 3060 12GB cards, two 4TB SSDs, and a 10Gb NIC, and it's running great. Since those GPUs each use an 8-pin connector and draw 170W, I have had no issues with power. The PSU is the T31JM, which I picked up from Alta Technologies, Inc. If you have any questions, please let me know. Later :)

nonolondon

1 Rookie

•

17 Posts

0

June 26th, 2025 14:31

Thanks. So my understanding is that the H1300EF-02 works but the H1300EF-01 does not. Basically, it has to be the one marked "T31JM", as you said and as was mentioned in another post.

On a side note, I am trying to put together a similar setup, but with second-hand 3090s. I'm not sure whether two will be possible in terms of airflow and power. The thing is, they don't come cheap! The best price I have found so far is about 150 USD equivalent (I'm in Europe).

Thanks for your answer.

best

Cyberato

1 Rookie

•

19 Posts

0

June 26th, 2025 20:22

@nonolondon

The RTX 3090 uses a 12-pin power connector and can draw anywhere from 350W up to 450W. It will also run hotter in an enclosed case like the T7810. If you plan to use two 3090s, the 1300W PSU is probably not going to work. Also, the T7810 comes with 6-pin connectors. You can use 6-pin to 8-pin adapters, but you will need two 6-pin connections for just one 8-pin connector. If you run 2x 8-pin on 2x 6-pin, the connector may heat up and melt if the card draws too much power, or the system may fail to POST or shut down randomly, unless you replace all of the cables. Either way, you can find GPUs with 8-pin connectors that draw less power, run cooler, and are more stable. If you are thinking of building an AI machine or a mining rig, you should check out the links below about the Intel Arc Pro B60. I'm considering it, if it does what they say it does and the price is right. I'm currently running the Proxmox hypervisor on my T7810, with multiple VMs and AI workloads. I have tested AI models of up to 22B, with Automatic1111 and the web UI running in Docker, and it performs really well. Note that the Nvidia RTX 5060 Ti has 16GB, uses one 8-pin connector, and draws about 180W. Sorry about the information overload!

Intel Arc Pro B60

https://youtu.be/vZupIBqKHqM

https://youtu.be/T-44sKtweH8
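To make the connector point above concrete, here is a small sketch that checks whether a card's draw fits within what its power connectors are rated to deliver. The per-connector limits (75W from the slot, 75W per 6-pin, 150W per 8-pin) are the nominal PCIe figures. The card wattages are the ones quoted in this thread, and the function names are made up for illustration.

```python
# Nominal PCIe power limits, in watts.
SLOT_W = 75
AUX_W = {"6-pin": 75, "8-pin": 150}


def available_power(connectors):
    """Rated power for one card: slot power plus its auxiliary connectors."""
    return SLOT_W + sum(AUX_W[c] for c in connectors)


def fits(card_draw_w, connectors):
    """True if the card's draw stays within the rated connector budget."""
    return card_draw_w <= available_power(connectors)


if __name__ == "__main__":
    # RTX 3060 at 170W on a single 8-pin (figures from the thread).
    print("3060 on 1x 8-pin:", fits(170, ["8-pin"]))
    # A 350W card fed from two native 6-pin leads is over the rated budget.
    print("350W card on 2x 6-pin:", fits(350, ["6-pin", "6-pin"]))
```

This only compares rated figures. It says nothing about cable gauge, adapter quality, or transient spikes, which is where the melting and random-shutdown risk mentioned above comes from.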

Cyberato

1 Rookie

•

19 Posts

0

June 26th, 2025 20:39

@nonolondon

Hey, if you're interested, I saw a "Genuine Dell Precision 1300W Power Supply H1300EF-02 T31JM" listed on eBay for US $79.99 or Best Offer.

https://www.ebay.com/itm/127182861354?chn=ps&norover=1&mkevt=1&mkrid=711-117182-37290-0&mkcid=2&mkscid=101&itemid=127182861354&targetid=2299003535995&device=c&mktype=pla&googleloc=9026814&poi=&campaignid=21203633013&mkgroupid=162035688435&rlsatarget=pla-2299003535995&abcId=9407526&merchantid=6363624&gad_source=1&gad_campaignid=21203633013&gbraid=0AAAAAD_QDh9SMr0NzqGz3cY-EYC2afFKy&gclid=EAIaIQobChMIxPOh6PaPjgMVhyZECB0HVzBDEAQYAyABEgIm0_D_BwE

nonolondon

1 Rookie

•

17 Posts

0

June 27th, 2025 08:44

@Cyberato Thanks, but apparently the seller does not ship to Paris... Another layer of complexity.

nonolondon

1 Rookie

•

17 Posts

0

June 27th, 2025 09:00

@Cyberato Yes, agreed. I am planning to use it for AI: ollama/open-webui. If power draw becomes an issue, I was thinking of powering the second card with an external PSU (ugly, but it solves the problem cheaply).

I will connect the two cards with an NVLink bridge; the idea is to increase the total VRAM capacity. I started with 12GB VRAM cards but was limited in which models I could run. Out of interest, do you manage to run Flux? I dropped Automatic1111 in favor of ComfyUI (I felt that more models and libraries were supported), but maybe I was wrong.

I don't know Proxmox, but I will take a look.

Intel cards might be interesting, but since I am still toddling my way around AI, they seem likely to introduce another layer of complexity regarding driver compatibility with the PyTorch/TensorFlow libraries.

I managed to get my hands on an RTX 3090 Turbo, which has a similar design that pushes air out of the case, and a small form factor...

Cyberato

1 Rookie

•

19 Posts

0

June 28th, 2025 02:59

@nonolondon

Yes, the RTX 3090 Turbo is a beast of a card. I've seen it on eBay, still a little pricey, but I'm opting for quantity as a budget alternative, considering the cost of GPUs and power consumption. Right now, I've got ollama/open-webui running Kokoro and I'm using Automatic1111 for image generation, and it's running pretty well. BTW, have you ever heard of vLLM distributed inference using Ray? I'm working on another rig with two AMD 9070s I picked up, and I will be trying to build a vLLM distributed inference setup. Gonna give it a shot and see what happens. Here's a link to my Open WebUI: https://ai.6-tos.com/

Let me know how your setup goes.

Cyberato

1 Rookie

•

19 Posts

0

June 30th, 2025 23:20

@nonolondon

My current setup for the T7810, in case anybody wants to know.

BTW, I was able to run "mistral-small3.2:24b-instruct-2506-q4_K_M".

It ran pretty well, so I guess quantized models are good for budget GPUs in a VM.

https://t7810.6-tos.com/
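As a rough illustration of why a 4-bit quantized 24B model can run on two 12GB cards, here is a sketch that estimates the memory footprint. The bits-per-weight value for q4_K_M (about 4.8) and the 20% overhead for KV cache and buffers are assumptions, not measured figures, so treat the result as a ballpark only.

```python
def weights_gb(params_billion, bits_per_weight):
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_weight / 8


def fits_in_vram(params_billion, bits_per_weight, gpu_vram_gb, overhead=0.2):
    """True if weights plus an assumed overhead fit in the combined VRAM.

    `gpu_vram_gb` is a list with one entry per GPU. Summing VRAM across
    cards is optimistic: splitting layers between GPUs is never perfectly even.
    """
    needed = weights_gb(params_billion, bits_per_weight) * (1 + overhead)
    return needed <= sum(gpu_vram_gb)


if __name__ == "__main__":
    size = weights_gb(24, 4.8)  # assumed ~4.8 bits per weight for q4_K_M
    print(f"Estimated weights: {size:.1f} GB")
    print("Fits on one 12GB card:", fits_in_vram(24, 4.8, [12]))
    print("Fits on two 12GB cards:", fits_in_vram(24, 4.8, [12, 12]))
```

Under these assumptions the model does not fit on a single 12GB card but does fit across two, which matches the experience reported in this thread.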

nonolondon

1 Rookie

•

17 Posts

0

July 1st, 2025 09:08

Great to see you even managed to put 2 CPU at 120W TDP, my system is getting hot 2x105TDP. Out of interest what temp do you get ?

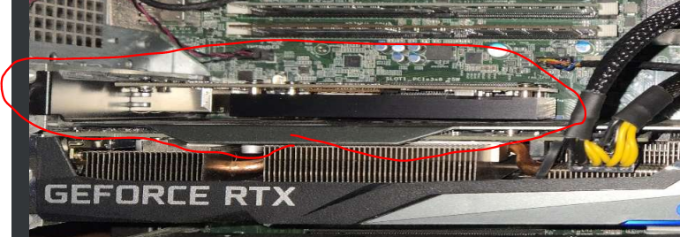

Out of curiosity what is this in your system (in red). Another K600 Nvidia card?

Thanks for all the sharing very useful and encouraging to see that others have already done what i am trying to achieve!

@Cyberato

nonolondon

1 Rookie

•

17 Posts

0

July 1st, 2025 09:15

@Cyberato I couldn't log in anymore, but the last time I tried, it was working very well. I definitely should look into Proxmox. Do you have a paid version? Do you run it in Docker on an Ubuntu system?

Thanks for sharing.

Cyberato

1 Rookie

•

19 Posts

0

July 1st, 2025 22:12

@nonolondon

Sorry about that, I was performing some maintenance on my Proxmox server. It's up and running now (see details in the link).

https://t7810.6-tos.com/

Cyberato

1 Rookie

•

19 Posts

0

July 1st, 2025 22:24

@nonolondon

I forgot to tell you: I am not using a paid version, and you don't need a license or a paid subscription to use it. It's a fully functional hypervisor; you only need to pay if you want support and the enterprise packages. It works very, very well. I believe many tech guys are using it.

nonolondon

1 Rookie

•

17 Posts

0

July 12th, 2025 17:32

Yeah, I got to your site the other day and saw it was working very well. I am going to give it a go. For now it displays the setup and the machines it is running, which is very interesting. On my side, I have received a couple of 3090 Turbos and the 1300W PSU. Thanks for sharing.

Cyberato

1 Rookie

•

19 Posts

0

July 12th, 2025 20:34

@nonolondon

Hey, that's great news. Please let me know once it's up and running, so you can share your experience with these 3090s in your system. I've been thinking of upgrading my 3060s to Titan RTX cards myself. Even though they are pretty old, they have 24GB and 4,608 CUDA cores, and they are currently cheaper than the 3090s, though less powerful. If I can find two of either model at a good price, they would probably work in my setup, but I would need to supply power to them somehow with an additional PSU. I also had a thought about that: I could buy some PCIe risers, run long riser cables, add PCIe extenders to the motherboard's PCIe slots, connect it all like a mining rig, and add several GPUs. Well, it's just a thought. I would have to do some research and see whether this crazy idea of mine would work before spending any $$$ on it.

I've also looked into the 5060 Ti with 16GB of memory, which also has 4,608 CUDA cores and a single 8-pin connector. This might work well too, but I will need to do some research on this idea as well. BTW, if you don't mind me asking, about how much did these 3090s cost? Oh, I also managed to run mistral-small3.2:24b-instruct-2506-q4_K_M. It ran OK, and I was still able to use the image generator as well. It responded to queries faster than I can read. LOL. Well, I'll catch you later :)
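For anyone who wants to put a number on "faster than I can read", here is a minimal sketch that asks a local Ollama server for a completion and computes tokens per second from the `eval_count` and `eval_duration` (nanoseconds) fields of the `/api/generate` response. The URL assumes Ollama's default port on the same machine, and the helper names are made up for illustration; adjust both for your own setup.

```python
import json
import urllib.request

# Assumed: Ollama listening on its default local port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model, prompt):
    """Build a non-streaming generate request for the Ollama REST API."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )


def tokens_per_second(response):
    """Generation speed from Ollama's eval_count and eval_duration (ns)."""
    return response["eval_count"] * 1e9 / response["eval_duration"]


def measure(model, prompt):
    """Send one prompt to the server and return tokens generated per second."""
    with urllib.request.urlopen(build_request(model, prompt)) as resp:
        return tokens_per_second(json.load(resp))


if __name__ == "__main__":
    model = "mistral-small3.2:24b-instruct-2506-q4_K_M"  # model tag from the thread
    print(f"{measure(model, 'Say hello in one sentence.'):.1f} tokens/s")
```

A single short prompt gives a noisy figure, so it is worth averaging a few longer generations before comparing GPUs.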