33 Posts

E20-324 VideoILT Module 5 - Scenario 1 & 2 solutions missing

Hi,

I searched the entire CD and can't find the solutions for the Scenario 1 (snapshot) and Scenario 2 (clone) questions in Module 5.

I'd appreciate it if an instructor could post the solutions. Someone has posted their own solutions in the past, but I am not sure whether the answers are correct.

Thank you.

andre_rossouw

62 Posts

0

June 20th, 2013 10:00

You have the method correct. Remember, though, that COFWs now perform 4 writes to the RL, so for LUN 1 you'd see 125 writes x 4 = 400 writes/s. For a R5 LUN, that's 400 x 4 = 1600 disk IOPs/150 = 10.7 disks. At this point you could decide that 10 disks would be OK [which is probably the case], or round up to the next available value for R5 4+1, which is 15 disks.

For LUN 2, you'd get 125/16 x 4 x 2 = 63 IOPs = 2 disks [you'd have to round up].

For LUN 3, you'd see 80 x 4 x 4 = 1280 disk IOPs/90 = 14.2 = 15 disks.

Yes, the host R/W ratio matters, because only host writes cause COFWs, so you need to first determine how many writes/s there are.
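
The disk counts above follow one pattern: divide the required disk IOPs by what a single disk can deliver, round up, and optionally round up again to whole RAID groups. Here is a minimal Python sketch of that pattern. The function name and the `group_size` parameter are made up for illustration; the 150 and 90 IOPs per disk figures are the ones used in this thread.

```python
import math

def disks_needed(disk_iops, iops_per_disk, group_size=None):
    """Return the number of disks needed to serve disk_iops.

    group_size (hypothetical parameter) rounds the result up to whole
    RAID groups, e.g. 5 for R5 4+1 or 2 for a R1/0 mirrored pair.
    """
    disks = math.ceil(disk_iops / iops_per_disk)
    if group_size:
        disks = math.ceil(disks / group_size) * group_size
    return disks

# LUN 3: 1280 disk IOPs on 7.2k NL-SAS at 90 IOPs per disk
print(disks_needed(1280, 90))                # 15
# LUN 2: 63 disk IOPs on 10k SAS at 150 IOPs per disk, R1/0 needs pairs
print(disks_needed(63, 150, group_size=2))   # 2
```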

andre_rossouw

62 Posts

0

June 20th, 2013 08:00

Post your answers to the questions and I'll let you know if they're correct.

paul881

33 Posts

0

June 20th, 2013 10:00

Hi Andre,

I will work on Scenario 1- Snapshot first

LUN 1 : 500 random 4 kib IOPs , 3:1 R/W

( 500 / 4 ) * 3 ( 3 writes to the RLP ) = 375 IOPs

# of R5 10K SAS disks :

( read i/o + ( write i/o * wp ) ) / disk iops

( 0 + ( 375 * 4 ) ) / 150 ( for SAS 10k ) = 10 drives

=======================================================

LUN 2 : 500 sequential 4 kib IOPs, 3:1 R/W

( 500 /4 ) = 125 , ( 125 / ( 64/4 kib) ) * 3 ( 3 writes to the RLP ) = 23.43 = 24 IOPs

# of R1/0 10K SAS

( 0 + ( 24 IOPs * 2 ) ) / 150 = 0.32 = 2 drives

============================================================

LUN 3 : 400 Random 8 kib IOPS , 4:1 R/W

( 400 / 8 ) * 3 = 150 IOPs

# of R5 7.2K NL-SAS

( 0 + ( 150 * 4 ) ) / 90 = 7 disks

==================================================================

One big question :

Does the R/W ratio change anything? I am not sure how to take it into consideration when doing the calculations.

On LUN 3 it is 4:1 R/W

Thank you for your feedback.

paul881

33 Posts

0

June 20th, 2013 11:00

Thanks for your reply. Sorry, could you please explain further?

For LUN 1 , it is (500/4) * 4 writes = 400 writes/s. 4 writes is for the RLP right ?

For the source LUN is it 1 read and 3 writes ?

For LUN 2, I am confused with sequential.

So it is 125 / 16 x 4 x 2. What are 16, 4 and 2? If 2 is the WP, what are 16 and 4 then?

For LUN 3, 80 x 4 x 4: if one 4 is the WP, what is the 80, and what is the other 4?

How do I factor the R/W ratio into my calculations, given that LUN 1 and LUN 2 are 3:1 and LUN 3 is 4:1?

Thank you.

andre_rossouw

62 Posts

0

June 20th, 2013 12:00

I made a mistake - for LUN 1 you'd see 125 x 4 = 500 writes/s into the RL. The Source LUN would see 1 write from the host, plus a 64 kB chunk read for the COFW.

For sequential I/O, a number of writes will occur before the next chunk is touched. The number of writes depends on the size of the chunk - fixed at 64 kB - and the size of the I/O - 4 kB in this case. The first write touches a virgin chunk, so causes a COFW. When the next 4 kB write arrives, it's no longer the first write, because the chunk has been touched already. This continues until write #17, which will again be the first write to a virgin chunk. So, we see that 64 kB / 4 kB = 16, which means that only one write out of 16 causes a COFW. If the I/O size was 8 kB, every 8th write would cause a COFW, so we'd divide the number of writes by 8.
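
The "one write in 16" rule can be checked by walking through sequential writes and counting how many land in a chunk that has not been touched yet. This is only an illustrative sketch: `count_cofws` is a made-up name, and it assumes the writes are back to back, aligned, and start at a chunk boundary with no chunks touched beforehand.

```python
CHUNK_KB = 64  # COFW chunk size stated in the post

def count_cofws(num_writes, io_kb, chunk_kb=CHUNK_KB):
    """Count copy-on-first-write events for back-to-back sequential writes."""
    touched = set()
    cofws = 0
    for i in range(num_writes):
        chunk = (i * io_kb) // chunk_kb  # chunk holding the start of write i
        if chunk not in touched:         # first write to this chunk: COFW
            touched.add(chunk)
            cofws += 1
    return cofws

print(count_cofws(16, 4))  # 1: all 16 writes fall in the first chunk
print(count_cofws(17, 4))  # 2: write #17 touches the next chunk
print(count_cofws(16, 8))  # 2: with 8 kB I/O, every 8th write causes a COFW
```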

For LUN 2, then, we have 125 writes/s / 16 [because it's sequential, and 64 kB/4 kB] x 4 [4 writes to the RL] x 2 [the write penalty for R1 or R1/0] = 63 disk IOPs.

LUN 3 uses R5, so we have 80 writes/s x 4 writes/COFW x 4 [write penalty for R5] = 1280 disk IOPs.
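
The LUN 2 and LUN 3 calculations can be written as one small function. This is a sketch of the arithmetic in this post only, not an official sizing formula; the function and parameter names are hypothetical, and the defaults (64 kB chunk, 4 RL writes per COFW) are the values quoted above.

```python
def rl_disk_iops(host_writes_per_s, raid_write_penalty, io_kb=None,
                 chunk_kb=64, rl_writes_per_cofw=4):
    """Disk IOPs generated by COFW writes into the reserved LUN.

    Pass io_kb for sequential I/O (only one write per chunk causes a
    COFW); leave it as None for random I/O (every write is assumed to
    cause a COFW).
    """
    cofws = host_writes_per_s
    if io_kb is not None:
        cofws = host_writes_per_s / (chunk_kb / io_kb)
    return cofws * rl_writes_per_cofw * raid_write_penalty

print(rl_disk_iops(125, 2, io_kb=4))  # 62.5, rounded up to 63 in the post
print(rl_disk_iops(80, 4))            # 1280
```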

You need the R/W ratio if you're given the number of IOPs and the R/W ratio. As examples, 500 IOPs with a R/W ratio of 3:1 gives you 125 writes/s, whereas 500 IOPs with a R/W ratio of 4:1 gives you 100 writes/s. You need the ratio of writes to total IOPs, so it's (writes / (reads + writes)).
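
As a quick check of the ratio arithmetic, here is a short Python sketch (the function name is just for illustration):

```python
def writes_per_second(total_iops, reads, writes):
    """Host writes/s given total IOPs and an R/W ratio of reads:writes."""
    return total_iops * writes / (reads + writes)

print(writes_per_second(500, 3, 1))  # 125.0
print(writes_per_second(500, 4, 1))  # 100.0
print(writes_per_second(400, 4, 1))  # 80.0 (LUN 3)
```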

andre_rossouw

62 Posts

0

June 20th, 2013 13:00

That's correct.

paul881

33 Posts

0

June 20th, 2013 13:00

Thanks for the explanation.

I just wanted to confirm that I understood you correctly. Please verify that this is correct

So, in the case of LUN 2 : 500 sequential 4 kib IOPs, 3:1 R/W,

We have 3:1 R/W which is 25% of 500 = 125 writes

In the case of LUN 3 : 400 Random 8 kib IOPS , 4:1 R/W,

We have 4:1 R/W which is 20% of 400 = 80 writes

Thanks again for your help.

paul881

33 Posts

0

June 26th, 2013 05:00

Hi Andre,

I just read your paper on Choosing the Right CLARiiON® Data Replication Method: A Performance-Based Approach and it is a great paper !

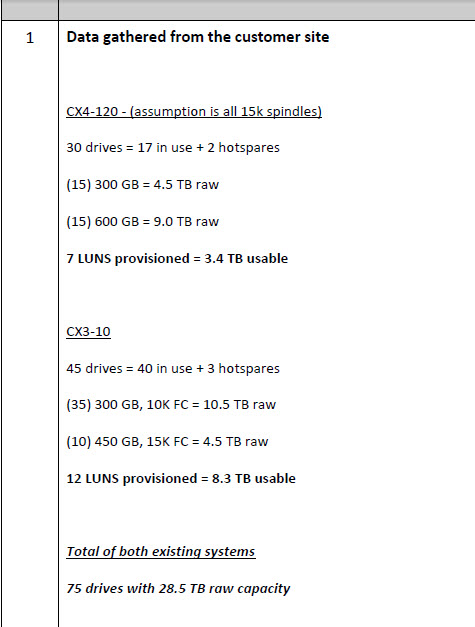

I am finishing my E20-324 VideoILT course; however, I can't figure out the solutions for Lab 9: Consolidation case study.

I am overwhelmed by the amount of information in the question, so I am not sure which pieces are pertinent.

I'd appreciate it if you could help me with this question.

The solution is the following:

Proposed configuration

Primary array

VNX5300 DPE with 8 x 3.5 inch 600 GB 10 k rpm SAS drives

additional drives - (6) 3.5 inch 600 GB 10 k rpm SAS drives

- (12) 3.5 inch 2 TB 7.2 k rpm NL-SAS drives

hot spares - (1) 3.5 inch 600 GB 10 k rpm SAS

(1) 3.5 inch 2 TB 7.2 k rpm NL-SAS

dual control stations

Local and remote protection suites

Note 1 : amounts below do NOT include the system drives (the first four drives in enclosure 0_0)

est. usable capacity = 17.01 TB

est. checkpoint/snapshot capacity of 5% = 970 GB

est. rule of thumb performance = 2760 backend IOPS

Note 2 : amounts below DO include the system drives (the first four drives in enclosure 0_0)

est. usable capacity = 18.58 TB

est. checkpoint/snapshot capacity of 5% = 980 GB

est. rule of thumb performance = 3320 backend IOPS

Note 3 : amounts below do NOT include the system drives (the first four drives in enclosure 0_0)

est. usable capacity = 11.78 TB

est. rule of thumb performance = 1200 backend IOPS

Note 4 : amounts below DO include the system drives (the first four drives in enclosure 0_0)

est. usable capacity = 13.35 TB

est. rule of thumb performance = 1760 backend IOPS

Q1. How did the solution arrive at the number of drives in the primary array?

Primary array

VNX5300 DPE with 8 x 3.5 inch 600 GB 10 k rpm SAS drives

additional drives - (6) 3.5 inch 600 GB 10 k rpm SAS drives................... and the rest of it.

Q2. Note 1 : how are the 17.01 TB and the 2760 IOPs obtained? I added up the capacities of all the disks in the primary array and the total is nowhere near this figure. How did they get the IOPs?

Q3. Note 2 : 18.58 TB, 3320 IOPs? I used the VNX capacity calculator and this is the capacity I obtained :

But I am not sure if I am doing this right.

Q4. Is the checkpoint / snapshot capacity usually 5% ?

Thank you for your help

andre_rossouw

62 Posts

0

June 26th, 2013 07:00

The solution uses 15 SAS drives and 13 NL-SAS drives. One of each type is used as a hot spare, and in this first example we'll ignore the system drives.

We therefore have 10 SAS drives and 12 NL-SAS drives. If the SAS drives are configured as 4+1 R5, we'll have 1400 disk IOPs and 8x 536 GB = 4288 GB. If the NL-SAS drives are configured as 4+2 R6, we have 960 disk IOPs and 8x 1834 GB = 14672 GB, for a total of 2360 disk IOPs and 18960 GB usable. Varying the configuration, e.g. by using 10+2 R6 for the NL-SAS disks, will give different answers.
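
These totals can be reproduced with a short Python sketch. Note one assumption: the per-drive figures of 140 IOPs (10k SAS) and 80 IOPs (NL-SAS) are not stated in this post; they are inferred by dividing the totals above by the drive counts (1400 / 10 and 960 / 12). The function name is hypothetical.

```python
def group_totals(num_groups, data_drives, total_drives, drive_gb, drive_iops):
    """Return (usable GB, disk IOPs) for identical RAID groups.

    Capacity counts only the data drives; IOPs count every drive in
    the group.
    """
    usable_gb = num_groups * data_drives * drive_gb
    disk_iops = num_groups * total_drives * drive_iops
    return usable_gb, disk_iops

# Assumed per-drive IOPs: 140 for 10k SAS, 80 for NL-SAS (inferred, see above)
sas = group_totals(2, 4, 5, 536, 140)   # 2x 4+1 R5 -> (4288, 1400)
nl = group_totals(2, 4, 6, 1834, 80)    # 2x 4+2 R6 -> (14672, 960)
print(sas[0] + nl[0], sas[1] + nl[1])   # 18960 2360
```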

From the VM numbers, we have 323 reads and 174 writes [LUN reads and writes]. To convert to the required disk IOPs, you need to know the RAID type. Assuming only R5, we'll need 323 + (4 x 174) = 1019 disk IOPs. The SQL host shows 902 reads and 88 writes, so we'd need 902 + (4 x 88) = 1254 disk IOPs. The total for all hosts is 2273 disk IOPs, so we'd have to design around that.
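
The host-to-disk conversion in this paragraph is reads plus the write penalty times writes. A minimal Python sketch, assuming R5 (write penalty of 4) as above; the function name is illustrative:

```python
def required_disk_iops(reads, writes, write_penalty):
    """Convert host (LUN) reads and writes per second to disk IOPs."""
    return reads + write_penalty * writes

vm = required_disk_iops(323, 174, 4)
sql = required_disk_iops(902, 88, 4)
print(vm, sql, vm + sql)  # 1019 1254 2273
```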

In the example you show, you've used 22 SAS and 15 NL-SAS drives, which is not what the solution specified. Your answers are different because of that.

5% is a rough rule of thumb for snapshot calculations - we assume [until real data is found] that about 5% of the source data will change while the snapshot is active.

paul881

33 Posts

0

June 26th, 2013 09:00

Thank you for the explanation.

You replied that the solution uses 15 SAS and 13 NL-SAS drives , one of each type is for HS.

(4+1)R5 for SAS and (4+2) R6 for NL-SAS

After that, the calculations were based on 10 SAS drives and 12 NL-SAS drives. Should it not be 14 SAS instead (15 - 1 HS)?

Q1. I am not sure why you used 8 x 536 (formatted capacity) = 4288 GB. How did you get 8? Should it not be 14 SAS x 536?

14 x 536 = 7504 * [(n-1)/n] , therefore usable = 7003 GB.

Q2. Similarly, how did you get 8 x 1834? Should it not be 12 NL-SAS x 1834?

12 x 1834 = 22008 * [(n-2)/n], usable = 18339 GB

Q3. I know that a 600 GB drive has a formatted capacity of 537 GB ( found this in the OE 31.5 best practices), so I use a factor of 1.11732.

If I take 2TB/1.11732 that should give me 1790 for the formatted capacity instead of 1834, is this alright ?

Thanks once again.

andre_rossouw

62 Posts

0

June 26th, 2013 10:00

As I said in my previous answer, I ignored the 4 system drives. That leaves 10 SAS drives, which would normally be configured as 2x 4+1 R5. In one 4+1 R5 group, only the equivalent of 4 drives holds user data, while the equivalent of the remaining drive holds parity information. For 2x 4+1 groups, that gives 8 data drives, hence the 8x 536 GB.

The same is true of the NL-SAS drives. My answer used 2x 4+2 R6, so there are also 8 data drives, giving the answer you can find above.

I looked at a VNX system to get the drive capacities I used, so I must assume they are correct. EMC has a document that lists drive types and capacities, and it's better to use that than to try to calculate usable capacity based on the manufacturer's published capacity.

In the examples you showed, n refers to the number of drives in the RAID Group, not the number of drives in the VNX. For a 4+2 R6 group, the usable capacity with 2 TB drives is 6 * 1834 * ((6-2) / 6) = 1834 * 4 = 7336 GB. Two of those give you 14672 GB - the answer I showed above.
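
To make the point about n concrete, here is a small Python sketch that applies the formula per RAID group. The function name is made up; 1834 GB is the formatted capacity quoted above for the 2 TB drives. The last line shows what happens when all 12 drives are treated as a single group, which corresponds to a 10+2 R6 layout rather than 2x 4+2.

```python
def usable_gb(drives_in_group, parity_drives, drive_gb, groups=1):
    """Usable capacity: n * drive_gb * ((n - p) / n), simplified to (n - p) * drive_gb."""
    return groups * (drives_in_group - parity_drives) * drive_gb

print(usable_gb(6, 2, 1834))            # 7336  (one 4+2 R6 group)
print(usable_gb(6, 2, 1834, groups=2))  # 14672 (2x 4+2 R6)
print(usable_gb(12, 2, 1834))           # 18340 (n = 12, i.e. one 10+2 R6 group)
```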