
Article Number: 000132886

Dell EMC DSS 8440 Server Powered by NVIDIA RTX GPUs for HPC and AI Workloads

Summary: The Dell EMC DSS8440 server is a two-socket, 4U server designed for High Performance Computing, Machine Learning (ML), and Deep Learning workloads. This article compares the performance of NVIDIA Quadro RTX 6000 and RTX 8000 GPUs with NVIDIA Volta V100S Tensor Core GPUs in this system.

Article Content

Symptoms

Deepthi Cherlopalle and Frank Han

Dell EMC HPC and AI Innovation Lab June 2020

The Dell EMC DSS8440 server is a two-socket, 4U server designed for High Performance Computing, Machine Learning (ML), and Deep Learning workloads. It supports various GPUs, such as NVIDIA Volta V100S and NVIDIA Tesla T4 Tensor Core GPUs, as well as NVIDIA Quadro RTX GPUs.

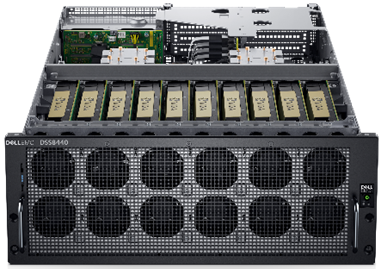

(Figure 1: Dell EMC DSS8440 server)

In this blog, we evaluate the performance of the cost-effective NVIDIA Quadro RTX 6000 and NVIDIA Quadro RTX 8000 GPUs against the top-tier V100S accelerator using several industry-standard benchmarks, covering both single-precision and double-precision workloads. While the Quadro series has existed for a long time, the RTX GPUs based on the NVIDIA Turing architecture launched in late 2018. The specifications in Table 1 show that the RTX 8000 offers twice the memory of the RTX 6000 (48 GB versus 24 GB), while its other listed specifications are the same. Both RTX GPUs have a higher power requirement than the V100S GPU (295 W versus 250 W). For workloads that require a larger memory capacity, the RTX 8000 is the better choice.

| Specification | RTX 6000 | RTX 8000 | V100S-32 GB |
|---|---|---|---|
| Architecture | Turing | Turing | Volta |
| Memory | 24 GB GDDR6 | 48 GB GDDR6 | 32 GB HBM2 |
| Default clock rate (MHz) | 1395 | 1395 | 1245 |
| Maximum GPU clock rate (MHz) | 1770 | 1770 | 1597 |
| CUDA cores | 4608 | 4608 | 5120 |
| FP32 (TFLOPS, maximum) | 16.3 | 16.3 | 16.4 |
| Memory bandwidth (GB/s) | 672 | 672 | 1134 |
| Power | 295 W | 295 W | 250 W |

Table 1: GPU specifications

| Component | Configuration |
|---|---|
| Server | Dell EMC PowerEdge DSS8440 |
| Processor | 2 x Intel Xeon 6248, 20 cores @ 2.5 GHz |
| Memory | 24 x 32 GB @ 2933 MT/s (768 GB total) |
| GPU | 8 x Quadro RTX 6000, 8 x Quadro RTX 8000, or 8 x Volta V100S (PCIe), depending on the configuration tested |
| Storage | 1 x Dell Express Flash NVMe 1 TB 2.5" U.2 (P4500) |
| Power supplies | 4 x 2400 W |

Table 2: Server configuration details

| Component | Version |
|---|---|
| BIOS | 2.5.4 |
| OS | RHEL 7.6 |
| Kernel | 3.10.0-957.el7.x86_64 |
| System profile | Performance Optimized |
| CUDA Toolkit | 10.1 |
| CUDA driver | 440.33.01 |

Table 3: System firmware and software details

| Application | Version |
|---|---|
| HPL | hpl_cuda_10.1_ompi-3.1_volta_pascal_kepler_3-14-19_ext; Intel MKL 2018 Update 4 |
| LAMMPS | March 3, 2020; OpenMPI 4.0.3 |
| MLPerf | v0.6 Training; Docker 19.03 |

Table 4: Application information

Cause

LAMMPS

LAMMPS is a molecular dynamics application maintained by researchers at Sandia National Laboratories and Temple University. LAMMPS was compiled with the KOKKOS package to run efficiently on NVIDIA GPUs. The Lennard-Jones dataset was used for the performance comparison, with timesteps per second (timesteps/s) as the metric, as shown in Figure 2:

(Figure 2: Lennard-Jones performance)

As listed in Table 1, the RTX 6000 and RTX 8000 GPUs have the same number of CUDA cores, the same single-precision performance, and the same memory bandwidth; they differ only in memory capacity. Because their configurations are otherwise identical, their performance on this benchmark is effectively identical as well, and both RTX GPUs scale well for this application.

The Volta V100S GPU is approximately three times faster than the Quadro RTX GPUs. The key factor in this higher performance is the greater memory bandwidth of the V100S GPU (1134 GB/s versus 672 GB/s, per Table 1).
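For readers unfamiliar with the benchmark, the Lennard-Jones case simulates particles that interact through the standard 12-6 Lennard-Jones pair potential, V(r) = 4ε[(σ/r)^12 − (σ/r)^6], and performance is reported as timesteps completed per second. The sketch below illustrates both ideas in reduced units (ε = σ = 1). It is a plain-Python, O(N²) illustration, not LAMMPS or KOKKOS code; the function names and the 2.5σ cutoff are assumptions chosen for the example.

```python
import math
import time
from typing import Callable, Sequence, Tuple

Point = Tuple[float, float, float]


def lj_energy(r: float, epsilon: float = 1.0, sigma: float = 1.0) -> float:
    """12-6 Lennard-Jones pair energy: 4*eps*((sigma/r)**12 - (sigma/r)**6)."""
    sr6 = (sigma / r) ** 6
    return 4.0 * epsilon * (sr6 * sr6 - sr6)


def lj_force(r: float, epsilon: float = 1.0, sigma: float = 1.0) -> float:
    """Radial force -dV/dr; positive values are repulsive."""
    sr6 = (sigma / r) ** 6
    return 24.0 * epsilon * (2.0 * sr6 * sr6 - sr6) / r


def total_energy(positions: Sequence[Point], cutoff: float = 2.5) -> float:
    """Sum pair energies over every pair closer than the (assumed) cutoff."""
    energy = 0.0
    for i in range(len(positions)):
        for j in range(i + 1, len(positions)):
            r = math.dist(positions[i], positions[j])
            if r < cutoff:
                energy += lj_energy(r)
    return energy


def timesteps_per_second(step: Callable[[], None], n_steps: int) -> float:
    """Benchmark metric: completed timesteps divided by wall-clock seconds."""
    start = time.perf_counter()
    for _ in range(n_steps):
        step()
    return n_steps / (time.perf_counter() - start)
```

Two particles placed at the potential minimum, r = 2^(1/6)σ, give a total energy of approximately −1.0 (that is, −ε), and the force there is approximately zero. A real MD step also integrates positions and rebuilds neighbor lists, which this sketch omits.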

High Performance Linpack (HPL) Performance

HPL is a standard HPC benchmark that measures computing performance. It is used as a reference benchmark by the TOP500 list to rank supercomputers worldwide.

The following figure shows the performance of the RTX 6000, RTX 8000, and V100S GPUs in the DSS 8440 server. The performance of the RTX GPUs is significantly lower than that of the V100S GPU. This is expected: HPL solves a dense linear system by LU factorization, which consists primarily of double-precision floating-point operations.
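The computation HPL times is the factorization of a dense matrix A into a row-permuted product PA = LU, followed by two triangular solves. The plain-Python sketch below shows that computation on a small system. Python floats are IEEE 754 double-precision values, so every update is an FP64 multiply-add of the kind that dominates HPL. This is an unblocked, single-process illustration with hypothetical function names; HPL itself uses a blocked, distributed algorithm.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def lu_factor(a: Sequence[Sequence[float]]) -> Tuple[Matrix, List[int]]:
    """LU factorization with partial pivoting (Doolittle form).

    Returns (lu, piv): lu holds L (unit diagonal, stored below it) and U
    (on and above the diagonal); row i of lu corresponds to row piv[i] of a.
    """
    n = len(a)
    lu = [[float(x) for x in row] for row in a]  # work on an FP64 copy
    piv = list(range(n))
    for k in range(n):
        # Partial pivoting: bring the largest |entry| in column k to row k.
        p = max(range(k, n), key=lambda i: abs(lu[i][k]))
        if lu[p][k] == 0.0:
            raise ValueError("matrix is singular")
        lu[k], lu[p] = lu[p], lu[k]
        piv[k], piv[p] = piv[p], piv[k]
        for i in range(k + 1, n):
            lu[i][k] /= lu[k][k]  # multiplier, stored as an entry of L
            for j in range(k + 1, n):
                lu[i][j] -= lu[i][k] * lu[k][j]  # FP64 multiply-add update
    return lu, piv


def lu_solve(lu: Matrix, piv: List[int], b: Sequence[float]) -> List[float]:
    """Solve Ax = b using the factors from lu_factor."""
    n = len(lu)
    y = [float(b[piv[i]]) for i in range(n)]  # apply the row permutation
    for i in range(n):  # forward substitution with unit-lower L
        for j in range(i):
            y[i] -= lu[i][j] * y[j]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution with U
        s = y[i] - sum(lu[i][j] * x[j] for j in range(i + 1, n))
        x[i] = s / lu[i][i]
    return x
```

For example, factoring [[2, 1, 1], [4, -6, 0], [-2, 7, 2]] and solving with b = [5, -2, 9] returns [1.0, 1.0, 2.0].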

(Figure 3: HPL performance with different GPUs)

Comparing the theoretical peak double-precision performance (Rpeak) of the GPUs explains the gap. The Rpeak of a single RTX GPU is approximately 500 GFLOPS, which limits the performance actually achieved (Rmax) on each GPU. The Rpeak of a Volta V100S GPU is 8.2 TFLOPS, which results in much higher performance from each card.
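The Rpeak values quoted above can be reproduced from Table 1 as 2 FLOPs per fused multiply-add × CUDA cores × maximum clock, scaled by each architecture's FP64-to-FP32 throughput ratio. The ratios used here (1/32 for Turing, 1/2 for Volta) are assumptions taken from NVIDIA's published architecture characteristics, not from this article, and the per-server totals assume all eight GPUs listed in Table 2 run at their maximum clock.

```python
from typing import Dict

# CUDA cores and maximum clock from Table 1.
# fp64_ratio (FP64 throughput / FP32 throughput) is an assumption, not from Table 1.
GPUS: Dict[str, Dict[str, float]] = {
    "Quadro RTX 6000": {"cuda_cores": 4608, "max_clock_mhz": 1770, "fp64_ratio": 1 / 32},
    "Quadro RTX 8000": {"cuda_cores": 4608, "max_clock_mhz": 1770, "fp64_ratio": 1 / 32},
    "Volta V100S": {"cuda_cores": 5120, "max_clock_mhz": 1597, "fp64_ratio": 1 / 2},
}
GPUS_PER_SERVER = 8  # from Table 2


def peak_gflops(cuda_cores: float, max_clock_mhz: float, ratio: float = 1.0) -> float:
    """Theoretical peak: each core retires one FMA (2 FLOPs) per clock cycle."""
    fp32_gflops = 2 * cuda_cores * max_clock_mhz / 1000.0  # MHz -> GHz
    return fp32_gflops * ratio


if __name__ == "__main__":
    for name, spec in GPUS.items():
        fp32 = peak_gflops(spec["cuda_cores"], spec["max_clock_mhz"])
        fp64 = peak_gflops(spec["cuda_cores"], spec["max_clock_mhz"], spec["fp64_ratio"])
        print(f"{name}: FP32 {fp32 / 1000:.1f} TFLOPS, FP64 {fp64:.0f} GFLOPS, "
              f"FP64 per server {GPUS_PER_SERVER * fp64 / 1000:.1f} TFLOPS")
```

Under these assumptions the RTX GPUs come out at about 510 GFLOPS FP64 (the approximately 500 GFLOPS above) and the V100S at about 8.18 TFLOPS (the 8.2 TFLOPS above), while the FP32 results, 16.3 and 16.4 TFLOPS, match Table 1.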

MLPerf

The need for industry-standard performance benchmarks for ML led to the development of the MLPerf suite. This suite includes benchmarks for evaluating the training and inference performance of ML hardware and software. This section addresses only the training performance of the GPUs. The following table lists the Deep Learning workloads, datasets, and target criteria used to evaluate the GPUs.

| Benchmark | Dataset | Quality target | Reference implementation model |
|---|---|---|---|
| Image classification | ImageNet (224x224) | 75.9% Top-1 accuracy | ResNet-50 v1.5 |
| Object detection (lightweight) | COCO 2017 | 23% mAP | SSD-ResNet34 |
| Object detection (heavyweight) | COCO 2017 | 0.377 box minimum AP, 0.339 mask minimum AP | Mask R-CNN |
| Translation (recurrent) | WMT English-German | 24.0 BLEU | GNMT |
| Translation (non-recurrent) | WMT English-German | 25.0 BLEU | Transformer |
| Reinforcement learning | N/A | Pre-trained checkpoint | MiniGo |

Table 5: MLPerf datasets and target criteria (Source: https://mlperf.org/training-overview/#overview)
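MLPerf Training scores a system by the wall-clock time it needs to reach the quality target in Table 5. The loop below is a minimal sketch of that idea, with hypothetical `train_one_epoch` and `evaluate` callbacks standing in for a real framework. It is not MLPerf reference code, which also prescribes how often evaluation runs and which phases are timed.

```python
import time
from typing import Callable, Dict, Tuple

# Quality targets from Table 5 (higher is better for each of these metrics).
QUALITY_TARGETS: Dict[str, float] = {
    "ResNet-50 v1.5 (Top-1 accuracy)": 0.759,
    "SSD-ResNet34 (mAP)": 0.23,
    "GNMT (BLEU)": 24.0,
    "Transformer (BLEU)": 25.0,
}


def time_to_train(train_one_epoch: Callable[[], None],
                  evaluate: Callable[[], float],
                  target: float,
                  max_epochs: int = 100) -> Tuple[float, int]:
    """Train until evaluate() reaches target; return (seconds, epochs used)."""
    start = time.perf_counter()
    for epoch in range(1, max_epochs + 1):
        train_one_epoch()
        if evaluate() >= target:  # stop as soon as the quality target is met
            return time.perf_counter() - start, epoch
    raise RuntimeError(f"quality target {target} not reached in {max_epochs} epochs")
```

Because the clock stops only when the target is met, a GPU that processes each epoch faster but needs more epochs to converge can still lose on this metric.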

The following figure shows the time to meet the target criteria for both the RTX and V100S GPUs:

(Figure 4: MLPerf performance)

Each result was obtained by performing multiple runs, discarding the highest and lowest values, and averaging the remaining runs, as required by the MLPerf guidelines. The performance of the two RTX GPUs is similar: the variance between them is minimal and within the acceptable range according to the MLPerf guidelines. While the Volta V100S GPU provides the best performance, the RTX GPUs also perform well, except on the object detection benchmark.
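The aggregation rule described above can be sketched in a few lines. Expressing the gap between two GPUs as a percentage of the faster score is an assumption made for illustration, since the article does not state how the variance between the RTX GPUs was computed; the function names are hypothetical.

```python
from typing import Sequence


def trimmed_mean(run_times: Sequence[float]) -> float:
    """Discard the single fastest and slowest run, then average the rest."""
    if len(run_times) < 3:
        raise ValueError("need at least three runs to drop the extremes")
    kept = sorted(run_times)[1:-1]
    return sum(kept) / len(kept)


def percent_difference(a: float, b: float) -> float:
    """Relative gap between two scores, as a percentage of the smaller one."""
    return abs(a - b) / min(a, b) * 100.0
```

With illustrative (not measured) run times of 9, 10, 11, 12, and 30 minutes, `trimmed_mean` drops 9 and 30 and returns 11.0, so a single outlier run does not skew the reported result.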

At the time of publication, the Image classification benchmark in MLPerf failed with RTX GPUs due to a convolution error. This issue is expected to be fixed in a future cuDNN release.

Resolution

Summary

In this blog, we discussed the performance of the Dell EMC DSS 8440 GPU server with NVIDIA RTX GPUs for HPC and AI workloads. The performance of the two RTX GPUs is similar; however, the RTX 8000 GPU is the better choice for applications that require more memory. For double-precision workloads, or workloads that require high memory bandwidth, the Volta V100S and the new NVIDIA A100 GPUs are the best choice.

In the future, we plan to provide a performance study of RTX GPUs with other single-precision applications, and an inference study of RTX and A100 GPUs.

Article Properties

Affected Product

High Performance Computing Solution Resources

Last Published Date

25 Feb 2021

Version

4

Article Type

Solution