Dell EMC Ready Solutions for HPC BeeGFS High Capacity Storage

Summary: The Dell EMC Ready Solutions for HPC BeeGFS High Capacity Storage is a fully supported, high-throughput parallel file system storage solution. It differs in design goals and use cases from the earlier NVMe-based BeeGFS high-performance solution, which emphasizes performance and is designed as scratch storage: a staging ground for transient datasets that are usually not retained beyond the lifetime of the job. The high capacity solution uses 4x Dell EMC PowerVault ME4084 arrays fully populated with a total of 336 drives and provides a raw capacity of 4 PB if equipped with 12 TB SAS drives.


This article was written by Nirmala Sundararajan, HPC and AI Innovation Lab, April 2020


Table of Contents:

- Introduction

- Solution Reference Architecture

- Hardware and Software Configuration

- Solution Configuration Details

- Performance Evaluation

- Conclusion and Future Work

Introduction

The Dell EMC Ready Solutions for HPC BeeGFS High Capacity Storage is a fully supported, high-throughput parallel file system storage solution. This blog discusses the solution architecture and how it is tuned for HPC performance, and presents I/O performance results from the IOzone sequential and random benchmarks. A BeeGFS high-performance storage solution built on NVMe devices was described in a blog published in November 2019. That architecture emphasized performance, and the solution described here is a high capacity storage solution. These two solutions for BeeGFS are different in terms of their design goals and use cases. The high-performance solution is designed as a scratch storage solution, a staging ground for transient datasets which are usually not retained beyond the lifetime of the job. The high capacity solution uses 4x Dell EMC PowerVault ME4084 arrays fully populated with a total of 336 drives and provides a raw capacity of 4 PB if equipped with 12 TB SAS drives.


Solution Reference Architecture

The Dell EMC Ready Solution for HPC BeeGFS High Capacity Storage consists of a management server, a pair of metadata servers, a pair of storage servers, and the associated storage arrays. The solution provides storage that uses a single namespace that is easily accessed by the cluster's compute nodes. The solution reference architecture has these primary components:

- Management Server

- Metadata Server pair with PowerVault ME4024 as back-end storage

- Storage Server pair with PowerVault ME4084 as back-end storage

Figure 1 shows the reference architecture of the solution.

Figure 1: Dell EMC Ready Solution for HPC BeeGFS Storage

In Figure 1, the management server running the BeeGFS monitoring daemon is a PowerEdge R640. The two MetaData Servers (MDS) are PowerEdge R740 servers in an active-active high availability configuration. The MDS pair is connected to the 2U PowerVault ME4024 array by 12 Gb/s SAS links. The ME4024 storage array hosts the MetaData Targets (MDTs). Another pair of PowerEdge R740 servers, also in an active-active high availability configuration, is used as Storage Servers (SS). This SS pair is connected to four fully populated PowerVault ME4084 storage arrays using 12 Gb/s SAS links. The ME4084 arrays support a choice of 4 TB, 8 TB, 10 TB, or 12 TB NL SAS 7.2 K RPM hard disk drives (HDDs) and host the Storage Targets (STs) for the BeeGFS file system. This solution uses Mellanox InfiniBand HDR100 for the data network. The clients and servers are connected to the 1U Mellanox Quantum HDR Edge Switch QM8790, which supports up to 80 ports of HDR100 by using HDR splitter cables.

Hardware and Software Configuration

The following tables describe the hardware specifications and software versions validated for the solution.

| Component | Details |
|---|---|
| Management Server | 1x Dell EMC PowerEdge R640 |
| Metadata Servers (MDS) | 2x Dell EMC PowerEdge R740 |
| Storage Servers (SS) | 2x Dell EMC PowerEdge R740 |
| Processor | Management Server: 2x Intel Xeon Gold 5218 @ 2.3 GHz, 16 cores; MDS and SS: 2x Intel Xeon Gold 6230 @ 2.10 GHz, 20 cores |
| Memory | Management Server: 12x 8GB DDR4 2666MT/s DIMMs - 96GB; MDS and SS: 12x 32GB DDR4 2933MT/s DIMMs - 384GB |
| InfiniBand HCA (Slot 8) | 1x Mellanox ConnectX-6 Single Port HDR100 Adapter per MDS and SS |
| External Storage Controllers | 2x Dell 12Gbps SAS HBAs (on each MDS); 4x Dell 12Gbps SAS HBAs (on each SS) |
| Data Storage Enclosure | 4x Dell EMC PowerVault ME4084 enclosures fully populated with a total of 336 drives; 2.69 PB raw storage capacity if equipped with 8TB SAS drives in 4x ME4084 |
| Metadata Storage Enclosure | 1x Dell EMC PowerVault ME4024 enclosure fully populated with 24 drives |
| RAID Controllers | Duplex RAID controllers in the ME4084 and ME4024 enclosures |
| Hard Disk Drives | 84x 8TB 7200 RPM NL SAS3 drives per ME4084 enclosure; 24x 960GB SAS3 SSDs per ME4024 enclosure |
| Operating System | CentOS Linux release 8.1.1911 (Core) |
| Kernel version | 4.18.0-147.5.1.el8_1.x86_64 |
| Mellanox OFED version | 4.7-3.2.9.0 |
| Grafana | 6.6.2-1 |
| InfluxDB | 1.7.10-1 |
| BeeGFS File system | 7.2 beta2 |

Table 1: Testbed Configuration

Note: For the purpose of performance characterization, BeeGFS version 7.2 beta2 has been used.

Solution Configuration Details

The BeeGFS architecture consists of four main services:

- Management Service

- Metadata Service

- Storage Service

- Client Service

There is also an optional BeeGFS Monitoring Service.

Except for the client service, which is a kernel module, the management, metadata, and storage services are user space processes. It is possible to run any combination of BeeGFS services (client and server components) together on the same machines. It is also possible to run multiple instances of any BeeGFS service on the same machine. In the Dell EMC high capacity configuration of BeeGFS, the monitoring service runs on the management server, multiple instances of the metadata service run on the metadata servers, and a single instance of the storage service runs on each storage server. The management service is installed on the metadata servers.

Monitoring Service

The BeeGFS monitoring service (beegfs-mon.service) collects BeeGFS statistics and provides them to the user, using the time series database InfluxDB. For visualization of data, beegfs-mon-grafana provides predefined Grafana dashboards that can be used out of the box. Figure 2 provides a general overview of the BeeGFS cluster showing the number of storage services and metadata services in the setup (referred to as nodes in the dashboard). It also lists the other dashboard views available and gives an overview of the storage targets.

Figure 2: Grafana Dashboard - BeeGFS Overview

Metadata Service

The ME4024 storage array used for metadata storage is fully populated with 24x 960GB SSDs. These drives are configured in 12x Linear RAID1 disk groups of two drives each as shown in Figure 3. Each RAID1 group is a MetaData target.

Figure 3: Fully Populated ME4024 array with 12 MDTs

Within BeeGFS, each metadata service handles only a single MDT. Since there are 12 MDTs, there need to be 12 instances of the metadata service. Each of the two metadata servers runs six instances of the metadata service. The metadata targets are formatted with an ext4 file system, because ext4 performs well with small files and small file operations. Additionally, BeeGFS stores information in extended attributes and directly on the inodes of the file system to optimize performance, both of which work well with the ext4 file system.
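The arithmetic behind this layout can be sketched in a few lines of Python. This is only an illustration: the server label `metaB`, the MDT numbering, and the assumption that each server hosts a contiguous block of MDTs are not stated in the article (only `metaA` appears in it).

```python
# Sketch: derive the number of MDTs and spread one metadata service per MDT
# evenly over the two metadata servers.
SSDS_IN_ME4024 = 24            # from the text: fully populated ME4024
DRIVES_PER_RAID1_GROUP = 2     # from the text: RAID1 groups of two drives
SERVERS = ["metaA", "metaB"]   # "metaB" is a hypothetical label


def plan_metadata_services(ssds, drives_per_group, servers):
    """Return {server: [MDT numbers]} with an equal share per server."""
    mdt_count = ssds // drives_per_group
    plan = {server: [] for server in servers}
    for mdt in range(1, mdt_count + 1):
        # Assign contiguous blocks of MDTs to each server (an assumption).
        index = (mdt - 1) * len(servers) // mdt_count
        plan[servers[index]].append(mdt)
    return plan


plan = plan_metadata_services(SSDS_IN_ME4024, DRIVES_PER_RAID1_GROUP, SERVERS)
for server, mdts in plan.items():
    print(f"{server}: {len(mdts)} metadata service instances for MDTs {mdts}")
```

With the values from the text this yields 12 MDTs and six metadata service instances per server.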


Management Service

The beegfs-mgmtd service is set up on both metadata servers. The beegfs-mgmtd store is initialized in the directory mgmtd on metadata target 1 as shown below:

/opt/beegfs/sbin/beegfs-setup-mgmtd -p /beegfs/metaA-numa0-1/mgmtd -S beegfs-mgmt

The management service is started on the metaA server.

Storage Service

In this high capacity BeeGFS solution, the data storage is spread across four PowerVault ME4084 storage arrays. Linear RAID-6 disk groups of 10 drives (8+2) each are created on each array. A single volume using all the space is created for every disk group. This results in 8 disk groups/volumes per array. Each array has 84 drives, so creating 8 x RAID-6 disk groups leaves 4 drives, which can be configured as global hot spares across the array volumes.

With the layout described above, there are a total of 32 x RAID-6 volumes across 4 x ME4084 in a base configuration shown in Figure 1. Each of these RAID-6 volumes is configured as a Storage Target (ST) for the BeeGFS file system, resulting in a total of 32 STs across the file system.
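As a quick cross-check of these numbers, the following Python sketch recomputes the drive counts, the number of storage targets, and the raw capacity for each supported drive size. The "data" figure (capacity left after RAID-6 parity and hot spares, before any file system overhead) is a derived estimate that the article does not state, and decimal units (1 PB = 1000 TB) are assumed.

```python
# Constants taken from the text.
ARRAYS = 4
DRIVES_PER_ARRAY = 84
GROUPS_PER_ARRAY = 8
DATA_DRIVES = 8      # RAID-6 (8+2): data drives per disk group
PARITY_DRIVES = 2    # RAID-6 (8+2): parity drives per disk group


def describe_layout(drive_tb):
    """Return drive counts and capacities for one drive size in TB."""
    group_size = DATA_DRIVES + PARITY_DRIVES
    spares_per_array = DRIVES_PER_ARRAY - GROUPS_PER_ARRAY * group_size
    storage_targets = ARRAYS * GROUPS_PER_ARRAY
    total_drives = ARRAYS * DRIVES_PER_ARRAY
    return {
        "total_drives": total_drives,
        "spares_per_array": spares_per_array,
        "storage_targets": storage_targets,
        "raw_pb": total_drives * drive_tb / 1000,
        # Estimate: capacity after parity and spares, before file system overhead.
        "data_pb": storage_targets * DATA_DRIVES * drive_tb / 1000,
    }


for drive_tb in (4, 8, 10, 12):
    info = describe_layout(drive_tb)
    print(f"{drive_tb:>2} TB drives: raw {info['raw_pb']:.2f} PB, "
          f"data {info['data_pb']:.2f} PB, "
          f"{info['storage_targets']} STs, "
          f"{info['spares_per_array']} spares per array")
```

For 8 TB drives this reproduces the 2.69 PB raw capacity listed in Table 1, and for 12 TB drives the roughly 4 PB quoted in the introduction.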

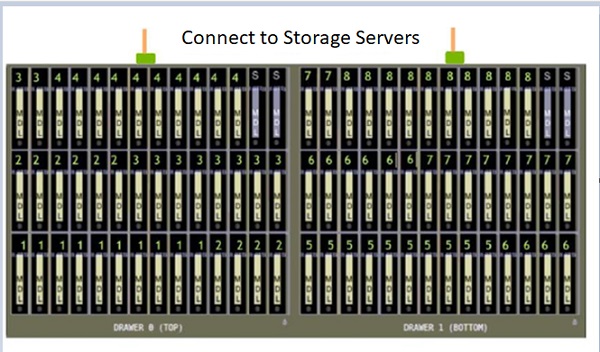

Each ME4084 array has 84 drives, numbered 0-41 in the top drawer and 42-83 in the bottom drawer. In Figure 4, each set of 10 drives marked with the same number, 1 to 8, represents one of the 8 RAID-6 disk groups. One volume is created out of each RAID-6 group. The drives marked "S" represent the global spares. Figure 4 shows the front view of the array after configuration of 8 volumes and 4 global spares.

Figure 4: RAID 6 (8+2) disk group layout on one ME4084

Client Service

The BeeGFS client module is loaded on all the hosts which require access to the BeeGFS file system. When the BeeGFS module is loaded and the beegfs-client service is started, the service mounts the file systems defined in the /etc/beegfs/beegfs-mounts.conf file instead of the usual approach based on /etc/fstab. With this approach, the beegfs-client starts like any other Linux service through the service startup script, which also enables the automatic recompilation of the BeeGFS client module after system updates.

Performance Evaluation

This section presents the performance characteristics of the Dell EMC Ready Solutions for HPC BeeGFS High Capacity Storage Solution using the IOzone sequential and random benchmarks. For further performance characterization using IOR and MDtest and details regarding the configuration of high availability, please look for a white paper that will be published later.

The storage performance was evaluated using the IOzone benchmark (v3.487). The sequential read and write throughput, and random read and write IOPS were measured. Table 2 describes the configuration of the PowerEdge R840 servers used as BeeGFS clients for these performance studies.

| Component | Details |
|---|---|
| Clients | 8x Dell EMC PowerEdge R840 |
| Processor | 4x Intel(R) Xeon(R) Platinum 8260 CPU @ 2.40GHz, 24 cores |
| Memory | 24x 16GB DDR4 2933MT/s DIMMs - 384GB |
| Operating System | Red Hat Enterprise Linux Server release 7.6 (Maipo) |
| Kernel Version | 3.10.0-957.el7.x86_64 |
| Interconnect | 1x Mellanox ConnectX-6 Single Port HDR100 Adapter |
| OFED Version | 4.7-3.2.9.0 |

Table 2: Client Configuration

The servers and clients are connected over an HDR100 network. The network details are provided in Table 3 below:

| Switch | Details |
|---|---|
| InfiniBand Switch | QM8790 Mellanox Quantum HDR Edge Switch - 1U with 80x HDR100 100Gb/s ports (using splitter cables) |
| Management Switch | Dell Networking S3048-ON ToR Switch - 1U with 48x 1GbE, 4x SFP+ 10GbE ports |

Table 3: Networking

Sequential Reads and Writes N-N

The sequential reads and writes were measured using the sequential read and write mode of IOzone. Tests were carried out with multiple thread counts, starting at 1 thread and increasing in powers of 2 up to 512 threads. At each thread count, an equal number of files was generated, since this test works on one file per thread (the N-N case). The processes were distributed across 8 physical client nodes in a round-robin manner so that the requests were equally distributed with load balancing.
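One way to express this round-robin placement is through the client list file that IOzone reads in cluster mode (option `-+m`), where each line names a client host, the working directory on that client, and the path to the IOzone executable. The sketch below generates such a list. The host names, the working directory, and the IOzone install path are assumptions for illustration; the article does not give them.

```python
# Hypothetical values: the article does not list host names or paths.
CLIENTS = [f"client{i:02d}" for i in range(1, 9)]   # 8 client nodes
WORK_DIR = "/mnt/beegfs/benchmark"                  # assumed working directory
IOZONE_BIN = "/usr/bin/iozone"                      # assumed install path


def build_threadlist(threads, clients, work_dir, iozone_bin):
    """Return one client-list line per thread, assigned round-robin."""
    lines = []
    for thread in range(threads):
        host = clients[thread % len(clients)]   # round-robin over the clients
        lines.append(f"{host} {work_dir} {iozone_bin}")
    return lines


threadlist = build_threadlist(16, CLIENTS, WORK_DIR, IOZONE_BIN)
print("\n".join(threadlist))
```

With 16 threads and 8 clients, each client appears twice in the list, so every node carries the same number of IOzone processes.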

For thread counts 16 and above an aggregate file size of 8TB was chosen to minimize the effects of caching from the servers as well as from BeeGFS clients. For thread counts below 16, the file size is 768 GB per thread (i.e. 1.5TB for 2 threads, 3TB for 4 threads and 6TB for 8 threads). Within any given test, the aggregate file size used was equally divided among the number of threads. A record size of 1MiB was used for all runs. The command used for Sequential N-N tests is given below:

Sequential Writes and Reads: iozone -i $test -c -e -w -r 1m -s $Size -t $Thread -+n -+m /path/to/threadlist
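The values substituted for `$Size` and `$Thread` follow from the file size rules described above. The following sketch works them out for every thread count tested; it assumes binary units (1 TB = 1024 GB), which is what the text's own examples imply (2 x 768 GB = 1.5 TB), and it is not the script used for the published runs.

```python
GB_PER_TB = 1024          # implied by the text: 2 threads x 768 GB = 1.5 TB
AGGREGATE_TB = 8          # aggregate file size for 16 threads and above
SMALL_RUN_GB = 768        # per-thread file size below 16 threads


def per_thread_size_gb(threads):
    """Return the per-thread file size in GB for a given thread count."""
    if threads >= 16:
        return AGGREGATE_TB * GB_PER_TB // threads
    return SMALL_RUN_GB


for exponent in range(10):            # 1, 2, 4, ..., 512 threads
    threads = 2 ** exponent
    size_gb = per_thread_size_gb(threads)
    aggregate_tb = size_gb * threads / GB_PER_TB
    print(f"{threads:>3} threads: -s {size_gb}g -t {threads} "
          f"(aggregate {aggregate_tb:g} TB)")
```

The per-thread size stays at 768 GB up to 8 threads, then halves with each doubling of the thread count, from 512 GB at 16 threads down to 16 GB at 512 threads.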

OS caches were also dropped on the servers between iterations as well as between write and read tests by running the command:

# sync && echo 3 > /proc/sys/vm/drop_caches

The file system was unmounted and remounted on the clients between iterations and between write and read tests to clear the cache.

Figure 5: N-N Sequential Performance

In Figure 5, the peak read throughput of 23.70 GB/s is attained at 256 threads and the peak write throughput of 22.07 GB/s is attained at 512 threads. The single-thread write performance is 623 MB/s and the single-thread read performance is 717 MB/s. The performance scales almost linearly up to 32 threads, after which reads and writes saturate. The overall sustained performance of this configuration is therefore ≈ 23 GB/s for reads and ≈ 22 GB/s for writes, with the peaks as mentioned above. Read throughput is very close to or slightly higher than write throughput, independent of the number of threads used.

Random Reads and Writes N-N

IOzone was used in the random mode to evaluate random IO performance. Tests were conducted on thread counts from 16 to 512 threads. IOzone was run with the direct IO option (-I) so that all operations bypass the buffer cache and go directly to the disk. A BeeGFS stripe count of 1 and chunk size of 1MB were used. The request size was set to 4KiB. Performance was measured in I/O operations per second (IOPS). The OS caches were dropped between the runs on the BeeGFS servers. The file system was unmounted and remounted on clients between iterations of the test. The command used for random read and write tests is as follows:

iozone -i 2 -w -c -O -I -r 4K -s $Size -t $Thread -+n -+m /path/to/threadlist

Figure 6: N-N Random Performance

Figure 6 shows that the write performance reaches around 31K IOPS and remains stable from 32 threads to 512 threads. In contrast, the read performance increases with the number of IO requests, reaching a maximum of around 47K IOPS at 512 threads, which is the maximum number of threads tested for the solution. ME4 requires a higher queue depth to reach the maximum read performance, and the graph indicates that higher performance might be reached with 1024 concurrent threads. However, as the tests were run with only 8 clients, there were not enough cores to run a 1024-thread test.
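The core-count limit can be checked against the client configuration in Table 2. The sketch below assumes one benchmark thread per physical core and ignores hyperthreading; that placement policy is an assumption, not something the article states.

```python
# Values from Table 2 (client configuration).
CLIENTS = 8
SOCKETS_PER_CLIENT = 4
CORES_PER_SOCKET = 24

TOTAL_CORES = CLIENTS * SOCKETS_PER_CLIENT * CORES_PER_SOCKET


def fits_one_thread_per_core(threads):
    """True if every thread can have its own physical core (assumed policy)."""
    return threads <= TOTAL_CORES


print(f"Physical cores across all clients: {TOTAL_CORES}")
for threads in (512, 1024):
    verdict = "fits" if fits_one_thread_per_core(threads) else "exceeds the core count"
    print(f"{threads} threads: {verdict}")
```

Eight clients provide 768 physical cores, which covers the 512-thread run but falls short of 1024 threads.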


Tuning Parameters Used

The following tuning parameters were in place while carrying out the performance characterization of the solution.

The default stripe count for BeeGFS is 4. However, the chunk size and the number of targets per file (stripe count) can be configured on a per-directory or per-file basis. For all these tests, the BeeGFS chunk size was set to 1MB and the stripe count was set to 1, as shown below:

$ beegfs-ctl --getentryinfo --mount=/mnt/beegfs/ /mnt/beegfs/benchmark/ --verbose

Entry type: directory

EntryID: 1-5E72FAD3-1

ParentID: root

Metadata node: metaA-numa0-1 [ID: 1]

Stripe pattern details:

+ Type: RAID0

+ Chunksize: 1M

+ Number of storage targets: desired: 1

+ Storage Pool: 1 (Default)

Inode hash path: 61/4C/1-5E72FAD3-1
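When repeating such tests, it can help to verify the stripe settings programmatically before each run. The following sketch parses the `beegfs-ctl --getentryinfo` output shown above and checks the chunk size and the desired number of storage targets. The parsing logic is an illustration based only on the output format in this article; other BeeGFS versions may format the output differently.

```python
# Output copied from the article (blank lines removed).
SAMPLE_OUTPUT = """\
Entry type: directory
EntryID: 1-5E72FAD3-1
ParentID: root
Metadata node: metaA-numa0-1 [ID: 1]
Stripe pattern details:
+ Type: RAID0
+ Chunksize: 1M
+ Number of storage targets: desired: 1
+ Storage Pool: 1 (Default)
Inode hash path: 61/4C/1-5E72FAD3-1
"""


def parse_entry_info(text):
    """Split each 'key: value' line at the first colon into a dict."""
    info = {}
    for raw in text.splitlines():
        line = raw.strip().lstrip("+ ").strip()
        if ":" not in line:
            continue
        key, _, value = line.partition(":")
        info[key.strip()] = value.strip()
    return info


def stripe_settings(info):
    """Return (chunk size string, desired number of storage targets)."""
    desired = int(info["Number of storage targets"].split(":")[1])
    return info["Chunksize"], desired


chunk_size, stripe_count = stripe_settings(parse_entry_info(SAMPLE_OUTPUT))
print(f"chunk size = {chunk_size}, stripe count = {stripe_count}")
```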

Transparent huge pages were disabled, and the following virtual memory settings were configured on the metadata and storage servers:

- vm.dirty_background_ratio = 5

- vm.dirty_ratio = 20

- vm.min_free_kbytes = 262144

- vm.vfs_cache_pressure = 50
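A small check like the one below can confirm that a server still carries these values, for example after a reboot. It relies on the standard Linux mapping of a sysctl name such as `vm.dirty_ratio` to the file `/proc/sys/vm/dirty_ratio`; the function names are illustrative, and the script only reads values and changes nothing.

```python
from pathlib import Path

# Values listed in the article.
EXPECTED_VM = {
    "vm.dirty_background_ratio": 5,
    "vm.dirty_ratio": 20,
    "vm.min_free_kbytes": 262144,
    "vm.vfs_cache_pressure": 50,
}


def sysctl_path(name, proc_sys=Path("/proc/sys")):
    """Map a dotted sysctl name to its file under /proc/sys."""
    return Path(proc_sys).joinpath(*name.split("."))


def find_mismatches(expected, proc_sys=Path("/proc/sys")):
    """Return {name: (current, expected)} for settings that differ."""
    mismatches = {}
    for name, want in expected.items():
        try:
            have = int(sysctl_path(name, proc_sys).read_text().split()[0])
        except (OSError, ValueError, IndexError):
            have = None   # unreadable or missing on this system
        if have != want:
            mismatches[name] = (have, want)
    return mismatches


for name, (have, want) in find_mismatches(EXPECTED_VM).items():
    print(f"{name}: current {have}, expected {want}")
```

The `proc_sys` parameter exists so the check can be pointed at a copy of the directory tree, which makes it easy to try out on a machine that is not a BeeGFS server.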

The following tuning options were used for the storage block devices on the storage servers.

- IO scheduler: deadline

- Number of schedulable requests: 2048

- Maximum amount of read ahead data: 4096
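On Linux, settings of this kind are usually applied through the block device queue attributes in sysfs. The sketch below maps the three options to the `scheduler`, `nr_requests`, and `read_ahead_kb` attributes; that mapping, and the device name `sdb`, are assumptions, since the article does not name the attributes or devices. The accepted scheduler name can also vary by kernel (for example `mq-deadline` on multi-queue block layers). The code only prints the commands and writes nothing.

```python
# Assumed mapping of the article's options to sysfs queue attributes.
BLOCK_TUNING = {
    "scheduler": "deadline",     # IO scheduler
    "nr_requests": "2048",       # number of schedulable requests
    "read_ahead_kb": "4096",     # maximum amount of read ahead data
}


def tuning_writes(device, sys_block="/sys/block"):
    """Return (sysfs path, value) pairs for one block device."""
    return [
        (f"{sys_block}/{device}/queue/{attribute}", value)
        for attribute, value in BLOCK_TUNING.items()
    ]


# "sdb" is a hypothetical device name for one storage target volume.
for path, value in tuning_writes("sdb"):
    print(f"echo {value} > {path}")
```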

In addition to the above, the following BeeGFS-specific tuning options were used:

beegfs-meta.conf

connMaxInternodeNum = 64

tuneNumWorkers = 12

tuneUsePerUserMsgQueues = true # Optional

tuneTargetChooser = roundrobin (benchmarking)

beegfs-storage.conf

connMaxInternodeNum = 64

tuneNumWorkers = 12

tuneUsePerTargetWorkers = true

tuneUsePerUserMsgQueues = true # Optional

tuneBindToNumaZone = 0

tuneFileReadAheadSize = 2m

beegfs-client.conf

connMaxInternodeNum = 24

connBufSize = 720896

Conclusion and Future Work

This blog announces the release of Dell EMC BeeGFS High Capacity Storage Solution and highlights its performance characteristics. This solution provides a peak performance of 23.7 GB/s for reads and 22.1 GB/s for writes using IOzone sequential benchmarks. We also see the random writes peak at 31.3K IOPS and random reads at 47.5K IOPS.

As part of the next steps, we are going to evaluate the metadata performance and N threads to a single file (N to 1) IOR performance of this solution. A white paper describing the metadata and IOR performance of the solution with additional details regarding the design considerations for this high capacity solution with high availability is expected to be published after the validation and evaluation process is complete.