The Israel-1 System

Israel-1 is a collaboration between Dell Technologies and NVIDIA to build a large-scale, state-of-the-art artificial intelligence (AI)/machine learning (ML) facility located in NVIDIA’s Israeli data center. Israel-1 will feature 2,048 of the latest NVIDIA H100 Tensor Core GPUs across 256 Dell PowerEdge XE9680 AI servers and a large NVIDIA Spectrum-X Ethernet AI network fabric featuring Spectrum-4 switches and NVIDIA BlueField-3 SuperNICs. Once completed, it will be one of the fastest AI systems in the world, serving as a blueprint and testbed for the next generation of large-scale AI clusters. The system will be used to benchmark AI workloads, analyze communication patterns for optimization and develop best practices for using Ethernet as an AI fabric.

In the Israel-1 system, each Dell PowerEdge XE9680 is populated with two NVIDIA BlueField-3 DPUs, eight BlueField-3 SuperNICs and eight H100 GPUs. The H100 GPUs in each server are connected with a switched NVIDIA NVLink internal fabric that provides 900GB/s of GPU-to-GPU communication bandwidth. The Dell PowerEdge XE9680 servers are interconnected with Spectrum-X Ethernet AI infrastructure.

Each Spectrum-4 Ethernet switch provides 51.2 Tb/s of throughput and is deployed in conjunction with the 400 Gb/s BlueField-3 SuperNICs to interconnect the 2,048 GPUs in the system. Within each Dell PowerEdge XE9680, eight BlueField-3 SuperNICs are connected to the Spectrum-X host fabric and two BlueField-3 DPUs are connected to the storage, control and access fabric, as shown in Figure 1. The BlueField-3 SuperNIC is a new class of network accelerator, designed for network-intensive, massively parallel computing.
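The per-server figures above can be combined into cluster-wide totals with simple arithmetic. The sketch below is illustrative only: the constants come from the text, while the function name and the assumption that a switch's 51.2 Tb/s divides evenly into 400 Gb/s ports are ours.

```python
# Figures quoted in the text above.
SERVERS = 256
GPUS_PER_SERVER = 8
SUPERNICS_PER_SERVER = 8
DPUS_PER_SERVER = 2
SUPERNIC_GBPS = 400      # Gb/s per BlueField-3 SuperNIC
SWITCH_TBPS = 51.2       # Tb/s per Spectrum-4 switch


def cluster_totals() -> dict:
    """Derive cluster-wide totals from the per-server figures."""
    gpus = SERVERS * GPUS_PER_SERVER
    supernics = SERVERS * SUPERNICS_PER_SERVER
    return {
        "gpus": gpus,
        "supernics": supernics,
        "dpus": SERVERS * DPUS_PER_SERVER,
        # Host-fabric bandwidth leaving one server, in Tb/s.
        "per_server_fabric_tbps": SUPERNICS_PER_SERVER * SUPERNIC_GBPS / 1000,
        # Sum of all SuperNIC line rates across the cluster, in Tb/s.
        "total_injection_tbps": supernics * SUPERNIC_GBPS / 1000,
        # Assumption: switch throughput divides evenly into 400 Gb/s ports.
        "ports_400g_per_switch": round(SWITCH_TBPS * 1000 / SUPERNIC_GBPS),
    }


if __name__ == "__main__":
    for name, value in cluster_totals().items():
        print(f"{name}: {value}")
```

With one SuperNIC per GPU, the GPU count and the SuperNIC count are the same, which is what lets every GPU drive its own 400 Gb/s port on the host fabric.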

The combination of NVIDIA Spectrum-4 and BlueField-3 SuperNIC demonstrates the viability of a purpose-built Ethernet fabric for interconnecting the many GPUs needed for generative AI (GenAI) workloads. Systems at the scale of Israel-1 are expected to become increasingly common. The Dell PowerEdge XE9680 can leverage any fabric technology to interconnect the hosts of such systems.

The Dell PowerEdge XE9680 Server

The Dell PowerEdge XE9680 is purpose-built for the most demanding large AI/ML models. It is the first Dell server platform equipped with eight GPUs connected through switched high-bandwidth NVLink interconnects. With its 10 available PCIe slots, the Dell PowerEdge XE9680 is uniquely positioned to be the platform of choice for AI/ML applications. Its innovative modular design is shown in Figure 2.

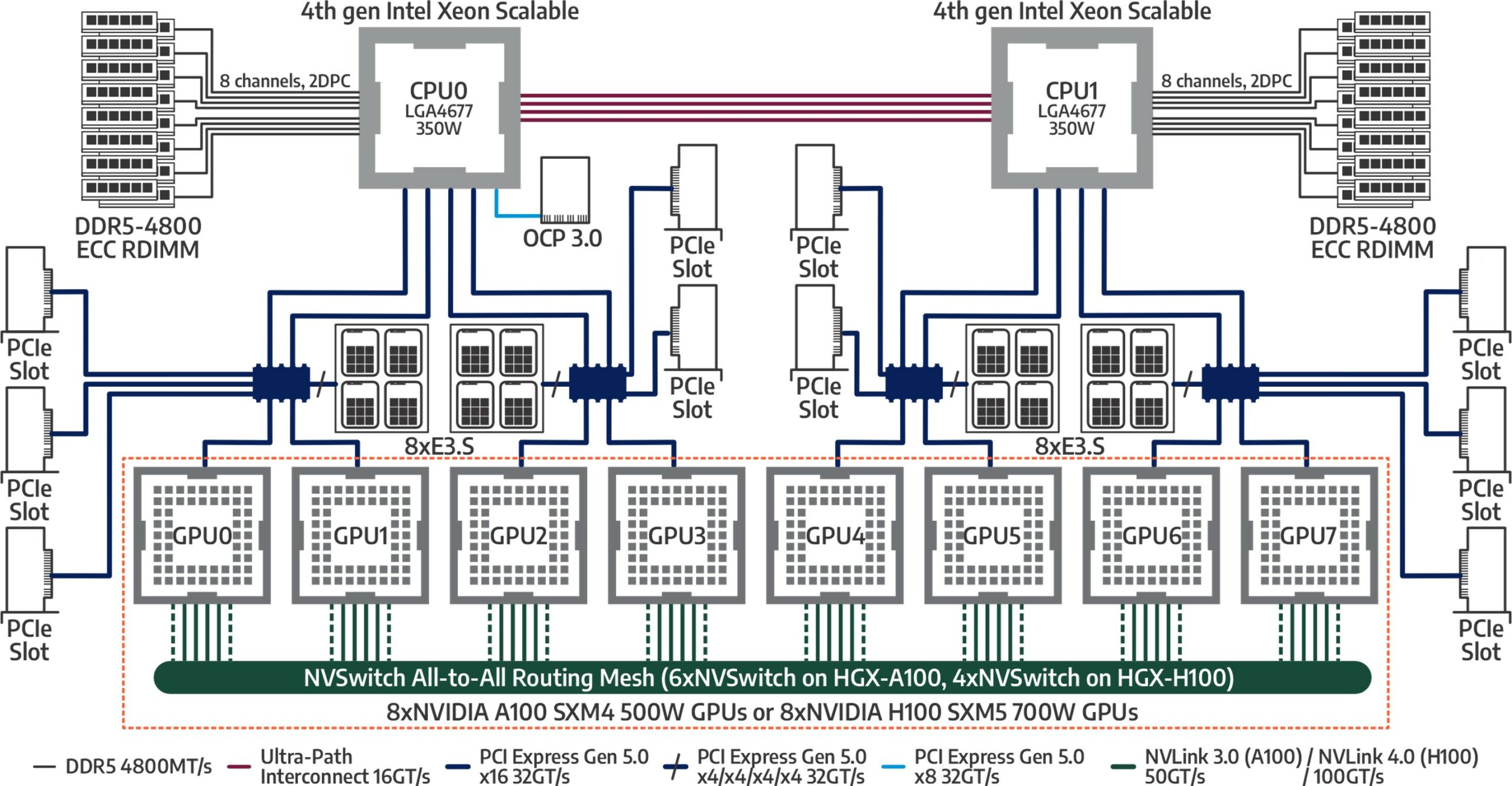

The top part of the chassis hosts the compute and memory subsystems, with two 4th Generation Intel Xeon Processors and up to 4TB of RAM. The bottom part of the chassis provides 10 PCIe Gen5 slots, able to accommodate up to 10 Gen5 PCIe full-height devices, and PCIe Gen5 connectivity to a variety of GPU modules, including the H100 GPU system used in the Israel-1 supercomputer. The Dell PowerEdge XE9680 H100 GPU system includes the NVLink switching infrastructure and is shown in Figure 3.

The internal PCIe architecture of the Dell PowerEdge XE9680 is designed with AI applications in mind. NVIDIA GPUs and software implement GPUDirect RDMA, which allows GPUs on separate servers to communicate over an RDMA-capable network (such as RoCE) without host CPU involvement. This reduces latency and improves throughput for the GPU-to-GPU inter-server communications common in AI workloads. Figure 4 shows a detailed internal view of the Dell PowerEdge XE9680.

Eight of the Dell PowerEdge XE9680 PCIe slots are each designed with a direct connection to one of the eight GPUs through a set of embedded PCIe switches. Each connection uses x16 PCIe 5.0 lanes, which provide the full 400 Gb/s of bandwidth to each SuperNIC-GPU pair. This allows efficient server-to-server communication for the GPUs through the BlueField-3 SuperNICs and delivers the highest throughput coupled with the lowest latency for GPUDirect and other RDMA communication.
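The statement that an x16 PCIe 5.0 link can carry a full 400 Gb/s can be checked with a short calculation. The sketch below uses the published PCIe 5.0 signalling rate (32 GT/s per lane) and 128b/130b line encoding; it ignores packet and protocol overhead, so the result is an upper bound per direction rather than a measured figure. The function and constant names are ours.

```python
PCIE5_GT_PER_LANE = 32.0          # PCIe 5.0 signalling rate, GT/s per lane
ENCODING_EFFICIENCY = 128 / 130   # 128b/130b line encoding
LANES = 16
SUPERNIC_GBPS = 400.0             # SuperNIC line rate from the text


def pcie_link_gbps(gt_per_lane: float = PCIE5_GT_PER_LANE,
                   lanes: int = LANES,
                   efficiency: float = ENCODING_EFFICIENCY) -> float:
    """Upper-bound bandwidth of a PCIe link in Gb/s, per direction.

    Only line encoding is accounted for; transaction-layer overhead
    is deliberately left out of this estimate.
    """
    return gt_per_lane * lanes * efficiency


if __name__ == "__main__":
    link = pcie_link_gbps()
    print(f"x16 PCIe 5.0 link: {link:.1f} Gb/s per direction")
    print(f"headroom over a 400 Gb/s SuperNIC: {link - SUPERNIC_GBPS:.1f} Gb/s")
```

The link rate comes out somewhat above 500 Gb/s per direction, which leaves headroom over the 400 Gb/s SuperNIC line rate even before protocol overhead is subtracted.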

Networking for AI

Networking is a critical component in building AI systems. With the emergence of AI models like large language models or multimodal GenAI, networking design and performance have taken center stage in determining AI workload performance. There are two main reasons to use network fabrics specifically designed for AI workloads:

- Communication between server nodes for parallel processing. As model size and data size grow, it takes more compute power to train a model. It is often necessary to employ parallelism, a technique that uses more than a single server to train a large model in a reasonable time. This requires high effective bandwidth with very low tail latency to exchange gradients across the servers. Typically, the larger the model being trained, the more data must be exchanged at each iteration. This functionality is provided by the Spectrum-X network fabric.

- Data access. Access to data is critical in the AI training process. Data is usually hosted on a central repository and accessed by the individual servers over the network. In large-model training, it is also necessary to save the state of training at periodic intervals. This process is referred to as checkpointing and is necessary to resume training if a failure occurs during the lengthy process. This function is usually provided by a separate storage network fabric, which isolates I/O traffic from server-to-server communication so that neither type of traffic interferes with the other and creates congestion.
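To give a feel for why gradient exchange grows with model size, the following sketch estimates per-GPU traffic for one gradient synchronization. It assumes data-parallel training with a ring all-reduce, in which each participant sends 2(N-1)/N times the gradient size; the source does not say which collective algorithm Israel-1 workloads use, and the 7-billion-parameter, 2-bytes-per-parameter model in the usage section is a hypothetical example.

```python
def ring_allreduce_bytes_per_gpu(grad_bytes: float, num_gpus: int) -> float:
    """Bytes each GPU sends in one ring all-reduce of `grad_bytes`."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    return 2 * (num_gpus - 1) / num_gpus * grad_bytes


def min_transfer_seconds(bytes_sent: float, link_gbps: float) -> float:
    """Lower bound on transfer time, ignoring latency and overlap."""
    return bytes_sent * 8 / (link_gbps * 1e9)


if __name__ == "__main__":
    # Hypothetical model: 7e9 parameters with 2-byte gradients.
    grad_bytes = 7e9 * 2
    sent = ring_allreduce_bytes_per_gpu(grad_bytes, num_gpus=2048)
    seconds = min_transfer_seconds(sent, link_gbps=400)
    print(f"per-GPU traffic: {sent / 1e9:.2f} GB")
    print(f"bandwidth-only lower bound: {seconds:.3f} s per synchronization")
```

Under these assumptions the per-GPU traffic approaches twice the gradient size as the GPU count grows, so doubling the model size roughly doubles the data exchanged at each iteration.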

The design principle of the Israel-1 cluster takes these requirements into account, with eight BlueField-3 SuperNICs in each Dell PowerEdge XE9680 dedicated to server-to-server communications, plus two additional BlueField-3 DPUs handling the data storage I/O traffic, as well as acting as the control plane for the system.

The use of BlueField-3 SuperNICs, along with Spectrum-4 switches, is one of the most critical design points of the Israel-1 system. Since AI workloads exchange massive amounts of data across multiple GPUs, traditional Ethernet networks may suffer from congestion issues as the fabric scales. NVIDIA has incorporated innovative mechanisms such as lossless Ethernet, RDMA adaptive routing, noise isolation and telemetry-based congestion control using BlueField-3 SuperNICs and Spectrum-4 switches. These capabilities significantly improve AI workload scalability compared to standard Ethernet networks.
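The congestion problem described above can be illustrated with a toy model. This is not NVIDIA's adaptive routing algorithm: it simply compares pinning each large flow to one randomly chosen link (a stand-in for static per-flow hashing) against an idealized scheme that spreads all traffic evenly across the links. All names and parameters are hypothetical.

```python
import random
from collections import Counter


def static_hash_max_load(num_flows: int, num_links: int,
                         flow_gbps: float, seed: int = 0) -> float:
    """Load on the busiest link when each flow is pinned to one link.

    A seeded random choice stands in for a per-flow hash function.
    """
    rng = random.Random(seed)
    flows_per_link = Counter(rng.randrange(num_links) for _ in range(num_flows))
    return max(flows_per_link.values()) * flow_gbps


def even_spread_max_load(num_flows: int, num_links: int,
                         flow_gbps: float) -> float:
    """Load on every link when traffic is spread perfectly evenly."""
    return num_flows * flow_gbps / num_links


if __name__ == "__main__":
    flows, links, rate = 8, 8, 400.0
    for seed in range(3):
        pinned = static_hash_max_load(flows, links, rate, seed=seed)
        print(f"seed {seed}: busiest link carries {pinned:.0f} Gb/s when pinned")
    print(f"even spread: {even_spread_max_load(flows, links, rate):.0f} Gb/s per link")
```

Because the busiest link can never carry less than the average, pinned flows match the even spread only when no two flows collide, and a few large flows colliding on one link is the kind of hot spot the fabric's congestion mechanisms aim to avoid.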

The Right Combination for a Large-Scale AI System

The combination of the Dell PowerEdge XE9680 AI server with its 10 PCIe Gen5 slots, the latest NVIDIA DPUs and SuperNICs, the NVIDIA HGX 8x H100 GPU module and Spectrum-X Ethernet for AI networks creates the ideal building blocks for AI systems. Dell is collaborating with NVIDIA on this system and looks forward to sharing the learnings and insights gathered from running and testing AI workloads on the system to help organizations build systems optimized for AI.