tl;dr: Dell PowerScale will soon support Parallel NFS (pNFS), addressing the data bottlenecks that slow down AI workloads. pNFS lets clients access data from multiple nodes in parallel, boosting throughput and enabling linear scalability for a single client. Because pNFS with Flexible File Layout is natively supported in most modern Linux distributions, no client-side drivers are required.

In the world of artificial intelligence, data bottlenecks are the enemy of innovation. As datasets grow and models become more complex, traditional storage systems struggle to keep up. This creates frustrating delays that slow down data ingestion, model training, and overall time to insight. To turn ambitious AI goals into reality, organizations need a storage foundation that scales performance as seamlessly as it scales capacity.

This week at SuperComputing (SC) 2025, Dell Technologies made new announcements for the Dell AI Factory. These include innovations in the Dell AI Data Platform, a key component of the Dell AI Factory, one of which is support for Parallel NFS (pNFS) with Flexible File Layout on Dell PowerScale. This new capability redefines storage performance, empowering you to unlock the full, parallel power of your infrastructure for the most demanding AI and analytics workloads.

The power of parallelism: What is pNFS?

Traditional NFS can create a performance bottleneck because it forces client communications through a single network endpoint. While effective for many uses, this serialized approach can limit the throughput required for large-scale AI operations, where speed is critical.

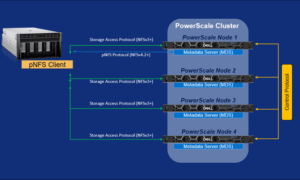

Parallel NFS (pNFS) fundamentally changes this dynamic. It works by separating the metadata path (information about the file) from the data path (the actual file contents). This architectural shift enables a much more efficient, parallel approach to data access. The result is a dramatic boost in throughput that accelerates your entire AI pipeline. PowerScale delivers its signature NAS simplicity with this new level of parallel performance, providing unified file and object data access and enterprise-grade resilience without operational complexity.

The pNFS process is designed for simplicity and maximum performance. By allowing clients to communicate with multiple PowerScale nodes at once, it unlocks the distributed power of the entire cluster.

Here’s how it works:

- Client-to-Cluster Communication: A client first contacts a metadata server on the PowerScale cluster to request information about a file’s location.

- Layout Retrieval: The metadata server provides the client with a “layout,” which is a map detailing which nodes in the cluster hold the file’s data.

- Parallel Data Streams: Armed with this map, the client establishes direct and simultaneous connections to multiple data servers, allowing it to read or write data in parallel.

This move from a single, serialized stream to multiple parallel streams is what eliminates bottlenecks. It allows your clients to harness the full, aggregate performance of the PowerScale cluster, leading to faster data ingestion and accelerated access for high-throughput AI workloads.
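To make the three steps above concrete, here is a minimal in-memory sketch in Python. It is a toy model, not the pNFS wire protocol or any PowerScale API: the `DataNode` and `MetadataServer` classes, the round-robin placement, and the 4-byte stripe size are all assumptions made for illustration.

```python
from concurrent.futures import ThreadPoolExecutor

STRIPE_SIZE = 4  # bytes per stripe; deliberately tiny (assumption for the demo)


class DataNode:
    """Hypothetical storage node holding some stripes of a file."""

    def __init__(self, name):
        self.name = name
        self.stripes = {}  # (path, stripe_index) -> bytes

    def read_stripe(self, path, index):
        return self.stripes[(path, index)]


class MetadataServer:
    """Hypothetical metadata server: knows which node holds each stripe."""

    def __init__(self, nodes):
        self.nodes = nodes
        self.layouts = {}  # path -> list of node names, one per stripe

    def place_file(self, path, data):
        # Demo setup only: spread stripes round-robin across the nodes.
        layout = []
        for index, offset in enumerate(range(0, len(data), STRIPE_SIZE)):
            node = self.nodes[index % len(self.nodes)]
            node.stripes[(path, index)] = data[offset:offset + STRIPE_SIZE]
            layout.append(node.name)
        self.layouts[path] = layout

    def get_layout(self, path):
        # Steps 1 and 2: the client asks where the file lives and gets a map.
        return list(self.layouts[path])


def parallel_read(mds, nodes_by_name, path):
    layout = mds.get_layout(path)
    # Step 3: fetch every stripe directly from its node, concurrently.
    with ThreadPoolExecutor(max_workers=len(nodes_by_name)) as pool:
        futures = [
            pool.submit(nodes_by_name[name].read_stripe, path, index)
            for index, name in enumerate(layout)
        ]
        # Collect results in stripe order to reassemble the file.
        return b"".join(f.result() for f in futures)


nodes = [DataNode(f"node{i}") for i in range(3)]
mds = MetadataServer(nodes)
mds.place_file("/data/sample.bin", b"abcdefghijklmnopqrstuvwxyz")
nodes_by_name = {n.name: n for n in nodes}
result = parallel_read(mds, nodes_by_name, "/data/sample.bin")
print(result)
```

The point of the sketch is the shape of the exchange: one small metadata request, followed by data requests that fan out across nodes instead of queuing behind a single endpoint.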

Simplified Deployment, Powerful Results

Adopting new technology should empower your teams, not add complexity to your environment. We designed our pNFS integration to be as seamless as possible. Because pNFS with Flexible File Layout is natively supported in most modern Linux distributions, you do not need to install special software or custom drivers on your client machines.

This makes deployment fast and straightforward. Your teams can leverage the benefits of parallel I/O without the management overhead that often comes with specialized client-side software. You can focus on driving innovation, not on managing infrastructure.
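As a rough illustration of what "no special software" can look like on a Linux client, the Python sketch below builds a standard NFS mount command and checks whether a mount's option string shows NFSv4.1 or later, the protocol version in which pNFS was introduced. The hostname, export path, and mount point are hypothetical, and this post does not specify which mount options PowerScale will require, so treat the values as placeholders.

```python
import shlex


def build_mount_command(server, export, mountpoint, vers="4.1"):
    """Return the argv for a standard Linux NFS mount at the given version."""
    return ["mount", "-t", "nfs", "-o", f"vers={vers}",
            f"{server}:{export}", mountpoint]


def mount_allows_pnfs(options):
    """True if a /proc/mounts-style option string shows NFSv4.1 or later."""
    for opt in options.split(","):
        key, _, value = opt.partition("=")
        if key in ("vers", "nfsvers"):
            major, _, minor = value.partition(".")
            return (int(major), int(minor or 0)) >= (4, 1)
    return False


# Hypothetical server name, export path, and mount point.
cmd = build_mount_command("powerscale.example.com", "/ifs/data", "/mnt/data")
print(shlex.join(cmd))
print(mount_allows_pnfs("rw,relatime,vers=4.1,proto=tcp"))
print(mount_allows_pnfs("rw,relatime,vers=3,proto=tcp"))
```

A version of 4.1 or later is necessary for pNFS but does not by itself prove that a layout is in use; that depends on what the server offers.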

Linear Scalability at the Client Level

Dell PowerScale clusters are already renowned for their linear scalability: add a node, and you add both performance and capacity. This scale-out architecture is designed to meet the needs of a multitude of clients and workflows, ensuring consistent performance across diverse use cases. However, AI workloads often demand something more: exceptional performance for a single GPU compute client.

This is where pNFS truly shines. While traditional NFS can create bottlenecks by forcing all client communications through a single network endpoint, PowerScale with pNFS takes a different approach. By enabling a single client to establish parallel connections to multiple nodes in the cluster, pNFS delivers true linear scalability at the client level. This means that as you add nodes to your PowerScale cluster, a single client can harness the full, aggregate performance of the expanded infrastructure.
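A back-of-the-envelope model can show why this matters for one client. The Python sketch below is a simplification under stated assumptions, not a benchmark: the per-node and client-link figures are invented, and the model assumes ideal striping with the client's own network link as the only other limit.

```python
def single_endpoint_throughput(per_node_gbs, client_link_gbs):
    """One client talking to one node: capped by that node or by the client link."""
    return min(per_node_gbs, client_link_gbs)


def parallel_throughput(nodes, per_node_gbs, client_link_gbs):
    """One client talking to many nodes at once: scales with node count
    until the client's own link becomes the limit."""
    return min(nodes * per_node_gbs, client_link_gbs)


PER_NODE_GBS = 10     # hypothetical throughput one node can serve to a client
CLIENT_LINK_GBS = 50  # hypothetical client network capacity

for nodes in (1, 2, 4, 8):
    print(nodes,
          single_endpoint_throughput(PER_NODE_GBS, CLIENT_LINK_GBS),
          parallel_throughput(nodes, PER_NODE_GBS, CLIENT_LINK_GBS))
```

In this model the single-endpoint figure stays flat no matter how many nodes are added, while the parallel figure grows with the cluster until the client's link is saturated.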

For AI workloads, where speed and throughput are critical, this capability is a game-changer. Whether you’re training complex models or processing massive datasets in real time, pNFS ensures that your infrastructure scales seamlessly to meet the demands of even the most performance-intensive applications. With PowerScale, you get the best of both worlds: scale-out storage for diverse workflows and unparalleled performance for single-client AI operations.

Meet our experts at SC 2025

Technology is at its best when it empowers people to achieve great things. This advancement in PowerScale is designed to do just that—providing a powerful, scalable, and efficient foundation for your AI and data analytics strategies, offering parallel performance with the simplicity of NAS.

If you are heading to SC 2025, be sure to visit the Dell Technologies booth. This is your chance to meet our experts on-site, ask questions, and explore how our latest innovations can support your most ambitious projects. Let’s discover what’s possible, together.

Your guide to Dell AI Factory starts here.