At Dell Technologies, we believe technology should empower every organization and drive human progress. That’s why we’re announcing our latest collaboration with IBM, bringing the Granite 4.0 family of models to the Dell Enterprise Hub on Hugging Face.

This matters because enterprises are increasingly looking to run “open-source” models on-premises, and the Dell Enterprise Hub paired with the Dell AI Factory offers users a complete, validated path to secure, enterprise-grade AI deployment.

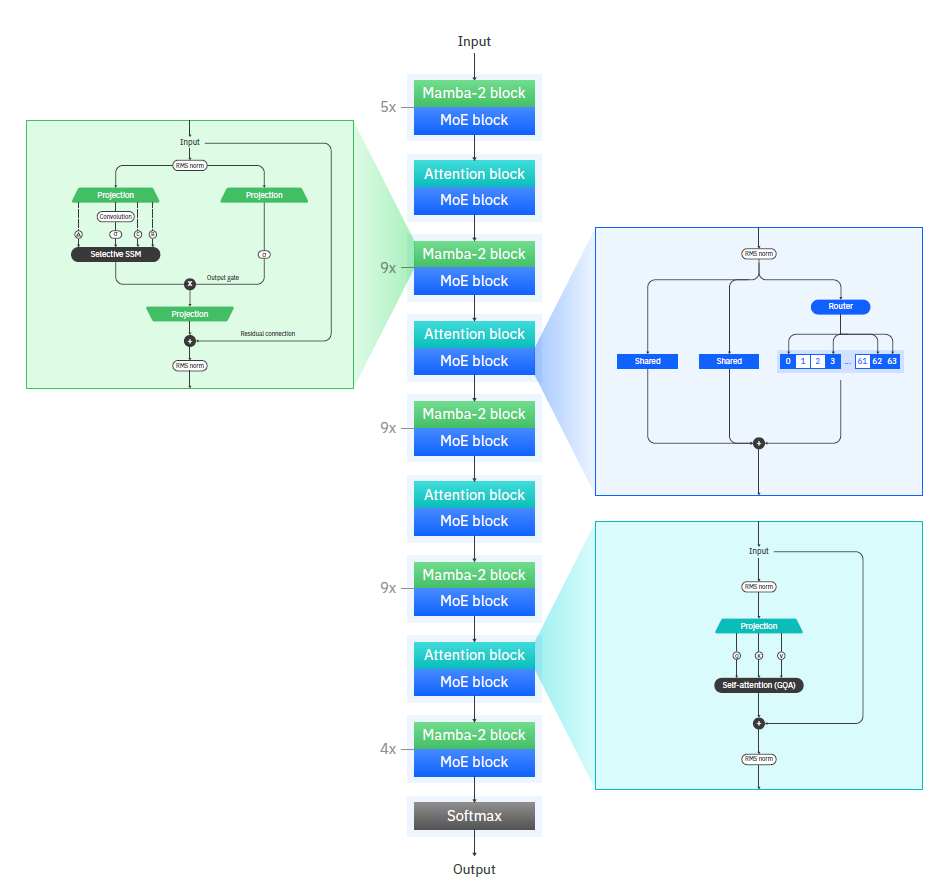

What’s new? The future is hybrid: Mamba-2 meets transformer

IBM’s Granite 4.0 leverages a unique model architecture combining Mamba and transformer components to deliver the best of both worlds. Mamba’s efficiency handles the heavy lifting of understanding global context across long documents, while transformer blocks provide the finishing touch, refining local details with high precision.

This powerful combination delivers the long-context capability, speed and memory efficiency of Mamba with the proven accuracy of transformers in a resource-conscious design. Together with the Mixture of Experts (MoE) strategy used in select Granite 4.0 models, it further reduces memory and compute footprint, which makes it well suited to enterprises.

Granite 4.0 on the Dell AI Factory: Innovation simplified

Advanced models are only as good as their accessibility. By offering Granite 4.0 through the Dell Enterprise Hub on our validated, full-stack Dell infrastructure, via the Dell AI Factory, we’re making cutting-edge AI enterprise-ready.

This collaboration has tangible benefits:

- Radical Efficiency: In IBM’s benchmark comparing Granite 4.0 H Tiny 7B with Granite 3.3 8B, the memory savings are most pronounced at longer context lengths and higher concurrency.

- Unlock Long-Context Power: Transformer-based models can lose accuracy drastically as context grows, a phenomenon known as context rot. Because Mamba-2 models hold their accuracy better at long context, this hybrid architecture removes model-based context limitations.

- NoPE (No Positional Encoding): IBM Granite 4.0 models also implement NoPE, an approach in which explicit positional encodings are removed from the transformer. Even without positional encodings, the models can still learn and infer positional information through other mechanisms within the network, which allows them to perform better at long context.

- Drive Open Innovation: The open Apache 2.0 license empowers developers to freely customize, fine-tune and adapt the models for specific business needs, fostering a culture of open and collaborative enterprise AI.
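To make the link between context length, concurrency and memory concrete, here is a rough back-of-the-envelope sketch of how a transformer's key-value (KV) cache grows. Every dimension below (layer counts, KV heads, head size, 128K context, 8 concurrent sequences, 16-bit values) is a hypothetical placeholder chosen for illustration, not a published Granite configuration, and the sketch ignores the small fixed-size state that Mamba layers keep per sequence.

```python
def kv_cache_bytes(attn_layers, kv_heads, head_dim,
                   context_len, concurrent_seqs, bytes_per_value=2):
    """Estimate KV-cache size for the attention layers of a model.

    The leading factor of 2 accounts for storing both keys and values.
    The cache grows linearly with context length and with the number of
    sequences served at once.
    """
    return (2 * attn_layers * kv_heads * head_dim
            * context_len * concurrent_seqs * bytes_per_value)


GIB = 1024 ** 3

# Hypothetical serving shape, shared by both models being compared.
shape = dict(kv_heads=8, head_dim=128, context_len=131_072, concurrent_seqs=8)

# Hypothetical model where all 40 layers use attention.
all_attention = kv_cache_bytes(attn_layers=40, **shape)

# Hypothetical hybrid where only 4 of the 40 layers use attention;
# the remaining layers are assumed to keep no per-token cache.
mostly_mamba = kv_cache_bytes(attn_layers=4, **shape)

print(f"all-attention: {all_attention / GIB:.0f} GiB")
print(f"hybrid:        {mostly_mamba / GIB:.0f} GiB")
```

Under these assumed numbers the cache shrinks in proportion to the number of attention layers, and the absolute gap widens as either context length or concurrency increases.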

Meet the Granite 4.0 family

The Dell Enterprise Hub provides access to a portfolio of Granite 4.0 models, each tailored to empower different enterprise applications:

- Granite 4.0 H Small: With 32 billion total parameters (9 billion active), this hybrid MoE model is a powerhouse for enterprise workloads that require deep contextual understanding of long and complex documents.

- Granite 4.0 H Tiny: An efficient hybrid MoE model with 7 billion total parameters (1 billion active), optimized for edge deployments and local experimentation. It brings powerful AI to where your data lives.

- Granite 4.0 Micro: A compact 3-billion-parameter traditional dense transformer model, offered as an alternative for platforms where Mamba-2 support is not yet optimized.
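As a quick illustration of what "total" versus "active" parameters means for the two MoE models above, the sketch below works through the arithmetic using the parameter counts quoted in this post. The 16-bit weight size is an assumption made for the memory estimate; actual footprints depend on the precision and quantization used at deployment.

```python
# Parameter counts as quoted in this post: (total, active per token).
MODELS = {
    "Granite 4.0 H Small": (32e9, 9e9),
    "Granite 4.0 H Tiny": (7e9, 1e9),
}


def active_fraction(total_params, active_params):
    """Share of the model's weights used to process each token."""
    return active_params / total_params


def weight_gib(total_params, bytes_per_param=2):
    """Approximate memory for all weights, assuming 16-bit parameters.

    In an MoE model every expert still has to be resident in memory,
    so this is driven by the total count, not the active count.
    """
    return total_params * bytes_per_param / 1024 ** 3


for name, (total, active) in MODELS.items():
    print(f"{name}: {active_fraction(total, active):.1%} of weights active per token, "
          f"about {weight_gib(total):.1f} GiB of weights at 16-bit")
```

The active count mainly governs compute per token, while the total count governs how much memory the weights occupy.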

Real-world impact, powered by Dell and IBM

The unique architecture of Granite 4.0 unlocks a new world of possibilities for your business.

- Deeper Document Insights: By maintaining accuracy at longer context, the models go beyond simple summaries. Analyze lengthy legal contracts, complex financial reports, or dense technical manuals to uncover critical insights quickly.

- Smarter RAG Systems: Maintaining accuracy at longer context is critical to Retrieval-Augmented Generation (RAG) based systems. By pairing with Granite Docling, users can build more grounded, efficient, and responsive systems.

- AI at the Edge: With models like Granite 4.0 Micro, users can deploy real-time AI directly on devices, independent of the cloud. This is a game-changer for industries like manufacturing, retail and logistics, where immediate, onsite intelligence is critical.

Dell and Hugging Face: Simplifying Enterprise AI

Through the Dell Enterprise Hub on Hugging Face, together we are making it easier than ever to deploy, manage and scale AI with confidence.

The Dell Enterprise Hub gives businesses access to pre-validated models optimized for Dell’s AI-ready infrastructure, powered by the latest NVIDIA GPUs. Enterprises can even use Dell Pro AI Studio to manage, deploy, and use Granite 4.0 H Small and Tiny on Dell Pro Max PCs via the Hub, making it easy to use Granite throughout your AI solutions.

Our comprehensive suite of tools, including the Dell AI CLI/Python SDK, Application Catalog and Model Catalog, removes friction from the development process. This complete, end-to-end solution, from hardware to software and support, accelerates your journey from concept to production.

By welcoming innovative models like IBM Granite 4.0 into this trusted ecosystem, we are empowering organizations like yours to build the future of AI on your own terms. Together, we can turn technological potential into human progress.

Learn more about how Dell Pro AI Studio is bringing powerful on-device AI to your deskside with IBM Granite models.

Meet us at IBM TechXchange in Orlando, October 6-9, for a live Hybrid AI demo showcasing Granite 4.0 models in action, and see how the Dell AI Factory can accelerate your journey.